Crawl Budget Optimization: How To Get More Pages Crawled

You're publishing content consistently, but Google seems to ignore half your pages. This frustrating disconnect often comes down to one overlooked technical SEO factor: crawl budget optimization. If search engines can't efficiently discover and process your URLs, even well-written content won't rank because it never gets indexed in the first place.

Every website receives a limited amount of attention from search engine crawlers. Large sites with thousands of pages feel this squeeze most acutely, but even smaller sites waste crawl resources on duplicate content, broken links, and low-value URLs. The result? Your most important pages get crawled less frequently, or not at all, while Googlebot burns through its allocation on pages that don't move the needle.

At RankYak, we automate daily content creation and publishing, so we know firsthand how critical it is to get new pages indexed quickly. Publishing great content means nothing if search engines never see it. This guide breaks down exactly how crawl budget works, what factors influence it, and the specific technical fixes you can implement today. By the end, you'll have a clear playbook to direct crawlers toward your priority pages and eliminate the bottlenecks slowing down your indexing.

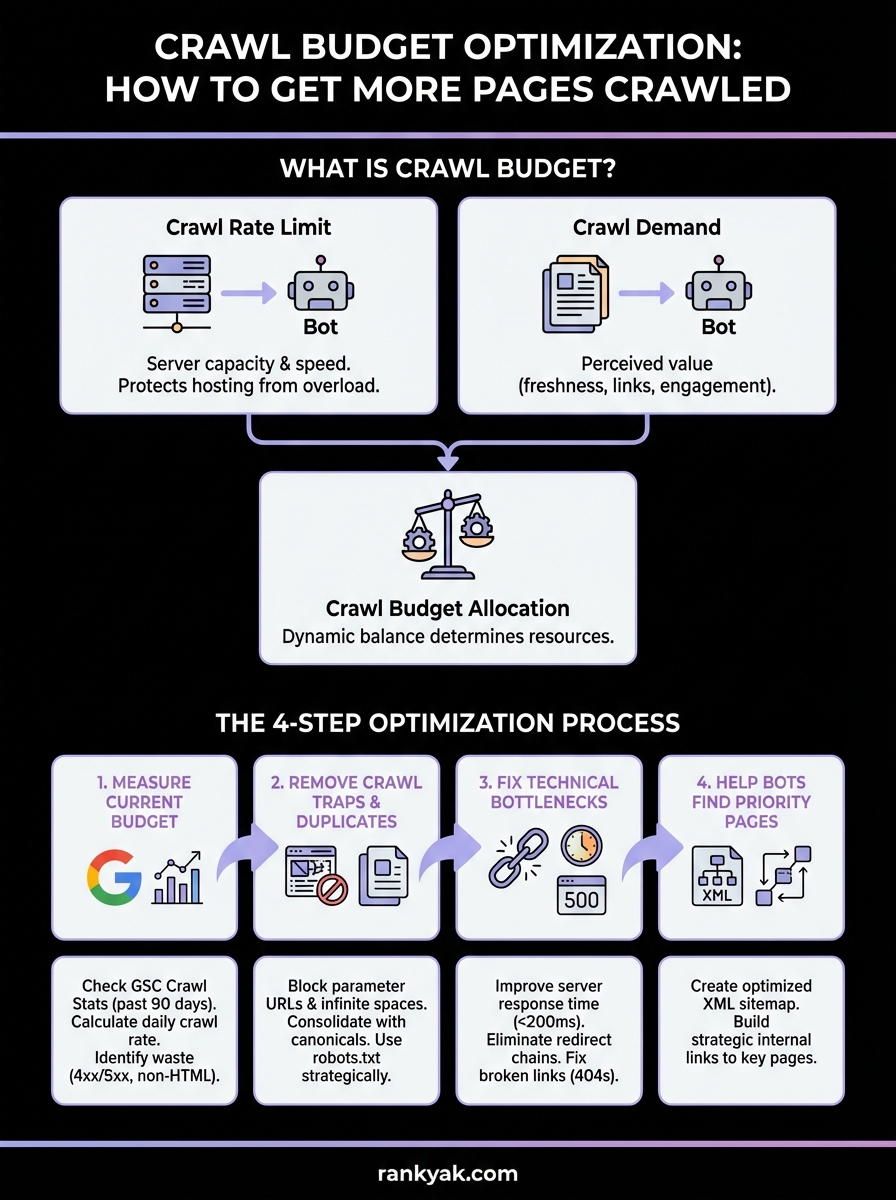

What crawl budget is and when to care

Crawl budget refers to the number of pages search engines will crawl on your site within a specific timeframe. Google assigns this budget based on your site's size, authority, server capacity, and how frequently your content changes. You don't get to set this number directly, but you can influence it through technical optimizations and content strategy. When your site has more URLs than your allocated crawl budget can handle, search engines prioritize which pages to visit and which to skip, potentially leaving your best content unindexed.

The two factors that determine crawl budget

Your crawl budget comes down to two core components that Google balances automatically. The first is the crawl rate limit, which Google's documentation now calls the crawl capacity limit and which protects your server from getting overwhelmed by bot traffic. Google's crawlers slow down if your site starts returning errors or response times spike, so they don't overload your hosting infrastructure. The second component is crawl demand, which reflects how much Google wants to crawl your URLs. Sites with fresh content and popular, well-linked pages get crawled more aggressively because Google wants to keep its index current.

Google combines these factors to determine how many resources to allocate to your site, adjusting the crawl rate based on real-time server performance and perceived content value.

These two factors work together in a dynamic system. You might have a high crawl rate limit because your server can handle heavy traffic, but if your content rarely changes and doesn't attract links, crawl demand stays low. Conversely, breaking news sites with constant updates and strong authority get crawled frequently because Google knows readers need the latest information. Understanding this balance helps you focus your crawl budget optimization efforts on the right technical levers.

When crawl budget actually matters

Small sites under 1,000 pages rarely need to worry about crawl budget. Google can easily crawl and index your entire site within days, even if your crawl allocation is modest. Problems arise when you operate large ecommerce stores with tens of thousands of product pages, news sites publishing hundreds of articles daily, or classified sites with user-generated content creating infinite URL variations. In these scenarios, you're fighting for every crawl slot because bots physically can't visit every URL.

You should prioritize crawl budget optimization if you notice newly published pages taking weeks to appear in Google's index, or if Search Console shows thousands of discovered URLs that haven't been crawled yet. Sites that automatically generate parameter-based URLs for filters, pagination, and search results often waste their budget on duplicate content. The same applies if you've recently migrated platforms, restructured your site architecture, or merged multiple domains. These changes create temporary crawl inefficiencies that need immediate attention to restore indexing velocity.

International sites with multiple language versions and large media libraries also benefit from careful crawl budget management. Your Spanish pages might get crawled daily while your German content sits unindexed for months simply because bots exhaust their allocation before reaching those sections. Similarly, image-heavy sites and video platforms can waste crawl resources on media files instead of actual HTML pages that drive rankings. If any of these scenarios match your situation, the techniques in this guide will directly impact how quickly your content reaches searchers.

Step 1. Measure your current crawl budget

You can't optimize what you don't measure. Before making any technical changes, you need baseline data showing how often Google crawls your site and which pages consume your budget. Google Search Console provides this information for free, giving you a detailed breakdown of crawler activity over the past 90 days. This data reveals patterns in bot behavior, identifies sections of your site that get ignored, and quantifies exactly how much room you have for improvement.

Access your crawl stats in Google Search Console

Navigate to Google Search Console and select your property, then click on Settings in the left sidebar. Scroll down to the Crawl Stats section and click "Open report" to access your complete crawl activity dashboard. This report shows you the total number of requests Googlebot made to your site, broken down by response codes, file types, and purpose. You'll see graphs displaying daily crawl patterns, average response times, and the specific URLs that received the most attention.

The report's "By purpose" breakdown separates crawl requests into Discovery and Refresh. Discovery requests happen when Googlebot fetches a URL it has never crawled before, typically one found through sitemaps or links. Refresh requests occur when bots revisit existing pages to check for updates. Focus on the total crawl requests per day and your average host response time, as these numbers indicate whether server performance limits your crawl budget or Google simply isn't prioritizing your content.

Calculate your daily crawl rate

Divide the total crawl requests in the report by the number of days it covers to get your average daily crawl rate, then compare that number to your total published pages. If Google crawls 3,000 URLs per day but you have 50,000 pages on your site, a single full pass takes about 17 days (50,000 / 3,000 is roughly 16.7), and longer in practice because many requests revisit pages Google already knows. Sites with fresh content need faster crawl rates to get new pages indexed quickly, so this calculation shows whether you have a crawl budget problem.

Understanding your crawl rate baseline helps you measure the impact of every crawl budget optimization change you make going forward.
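The arithmetic above can be sketched in a few lines of Python. The function name and the sample figures are illustrative assumptions, and the estimate is optimistic because it treats every request as a visit to a different page.

```python
import math


def days_to_full_crawl(total_requests: int, days_in_report: int, total_pages: int):
    """Return (average daily crawl rate, whole days needed to crawl every page once).

    Assumes each crawl request hits a distinct page, which real crawls do not do,
    so treat the result as a best case.
    """
    if total_requests <= 0 or days_in_report <= 0:
        raise ValueError("total_requests and days_in_report must be positive")
    daily_rate = total_requests / days_in_report
    return daily_rate, math.ceil(total_pages / daily_rate)


# Hypothetical numbers: 90,000 requests over 30 days on a 50,000-page site.
rate, days = days_to_full_crawl(total_requests=90_000, days_in_report=30, total_pages=50_000)
print(f"{rate:.0f} requests/day, about {days} days for one full pass")
```

Re-run the same calculation after each round of fixes to see whether the number of days drops.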

Identify crawl budget waste

Look for 4xx and 5xx status codes in your crawl stats report, as these represent crawl requests spent on broken or server-error pages. Check the "By file type" breakdown to see if bots spend excessive time crawling CSS, JavaScript, or image files instead of HTML pages. Review the "By response" breakdown to spot redirects, which cost at least two requests per URL: one for the redirect and one for its destination. The report lists only example URLs for each breakdown, so export those examples, or pull the full list of Googlebot requests from your server logs, and compare them against your sitemap to identify which priority pages Google ignores while spending time on low-value parameter URLs or outdated content.
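If you have Googlebot requests from server logs or an export, a short script can show how requests split across status classes. This is a minimal sketch: the `(url, status)` row shape and the sample data are assumptions, not a Search Console export format.

```python
from collections import Counter


def status_class_shares(rows):
    """Return the share of requests per status class (2xx, 3xx, 4xx, 5xx).

    rows is an iterable of (url, status_code) pairs.
    """
    counts = Counter(f"{status // 100}xx" for _url, status in rows)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {cls: round(n / total, 3) for cls, n in sorted(counts.items())}


# Hypothetical sample of ten crawl requests.
sample = [
    ("/", 200), ("/blog", 200), ("/products", 200), ("/about", 200),
    ("/pricing", 200), ("/contact", 200), ("/blog/post-1", 200),
    ("/old-page", 301), ("/deleted-page", 404), ("/report", 500),
]
print(status_class_shares(sample))
```

Not every non-2xx response is waste (a 304 Not Modified is a cheap, healthy answer), so read the 3xx share alongside the actual codes behind it.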

Step 2. Remove crawl traps and duplicate URLs

Crawl traps drain your budget by creating infinite URL variations that lead bots down endless rabbit holes of duplicate content. These traps typically come from faceted navigation, session IDs, URL parameters, and calendar-based archives that generate thousands of useless pages. Fixing these issues redirects crawler attention to pages that actually matter for your rankings, letting you reclaim wasted crawl budget for your priority content.

Block parameter URLs and infinite spaces

Your site's search filters, sorting options, and tracking parameters create duplicate versions of the same content under different URLs. An ecommerce site might generate /products?color=red&size=large alongside /products?size=large&color=red, forcing Google to crawl both URLs even though they display identical products. Calendar widgets, pagination links, and user session tokens compound this problem by creating exponentially more URL combinations than actual unique pages on your site.
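To see how many crawled URLs collapse into the same page, you can normalize query strings before comparing them. The sketch below sorts parameters and drops ones that never change content; the `IGNORED_PARAMS` set is a hypothetical example you would replace with your own site's parameters.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change page content on this example site.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url: str) -> str:
    """Sort query parameters and drop ignored ones so equivalent URLs compare equal."""
    parts = urlsplit(url)
    params = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(params), ""))


def group_duplicates(urls):
    """Map each normalized URL to the crawled variants that collapse into it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


crawled = [
    "https://yoursite.com/products?color=red&size=large",
    "https://yoursite.com/products?size=large&color=red",
    "https://yoursite.com/products?color=red&size=large&sessionid=abc123",
    "https://yoursite.com/products",
]
for canonical, variants in group_duplicates(crawled).items():
    print(canonical, "<-", len(variants), "variants")
```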

Identify these patterns by reviewing your crawled URLs in Search Console and looking for question marks, ampersands, and repeating URL structures. Google retired the URL Parameters tool from Search Console in 2022, so you can no longer tell Google there which parameters to ignore. Control parameter crawling with rules in your robots.txt file instead:

User-agent: *

Disallow: /*?

Disallow: /*&

Disallow: /search?

Disallow: /*sessionid=

Blocking parameter-based URLs prevents bots from wasting crawl requests on duplicate content variations that never needed indexing in the first place.
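Wildcard rules like these are easy to get wrong, so it helps to check them against real URLs before deploying. The sketch below approximates the documented wildcard behaviour (`*` matches any run of characters, a trailing `$` anchors the end of the URL). It is a simplified assumption-laden helper, not Google's parser: it ignores Allow rules and longest-match precedence.

```python
import re


def robots_pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex anchored at the path start."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal text and turn each * into "any run of characters".
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path_and_query: str, disallow_patterns) -> bool:
    """Return True if any Disallow pattern matches the path plus query string."""
    return any(robots_pattern_to_regex(p).match(path_and_query) for p in disallow_patterns)


RULES = ["/*?", "/*&", "/search?", "/*sessionid="]

for candidate in ["/products?color=red", "/products/red-shoes", "/blog/post?sessionid=abc"]:
    print(candidate, "blocked" if is_disallowed(candidate, RULES) else "allowed")
```

Note that `/*?` blocks every URL containing a query string, including paginated listings such as `?page=2`, so confirm that none of your indexable pages depend on parameters before using it.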

Consolidate duplicate content with canonicals

Canonical tags tell search engines which version of a duplicate page should be indexed when multiple URLs serve similar content. You need these tags on product pages with color variations, blog posts accessible through multiple category archives, and AMP or mobile versions of your desktop pages. A canonical is a hint rather than a crawl block: Google still has to fetch a duplicate to read its tag. Without canonicals, though, Google must guess which version to index and keeps recrawling every variant, whereas clear canonicals let it crawl the non-canonical versions less often over time.

Add canonical tags in the head section of every page that has duplicates:

<link rel="canonical" href="https://yoursite.com/original-page" />

Point all duplicates to your primary version using absolute URLs, not relative paths. Check your implementation by viewing page source and confirming the canonical points to the URL you want ranking in search results.
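Viewing source works for a handful of pages; for a larger batch, the same check can be scripted. This is a minimal sketch using only Python's standard library. It assumes you already have each page's HTML as a string, and the function and class names are illustrative.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def check_canonical(html: str, expected_url: str) -> list:
    """Return a list of problems found; an empty list means the tag looks right."""
    finder = CanonicalFinder()
    finder.feed(html)
    problems = []
    if len(finder.canonicals) != 1:
        problems.append(f"expected exactly one canonical tag, found {len(finder.canonicals)}")
    for href in finder.canonicals:
        parts = urlsplit(href)
        if not (parts.scheme and parts.netloc):
            problems.append(f"canonical is not an absolute URL: {href}")
        elif href != expected_url:
            problems.append(f"canonical points to {href}, expected {expected_url}")
    return problems


page = '<html><head><link rel="canonical" href="/original-page" /></head></html>'
print(check_canonical(page, "https://yoursite.com/original-page"))
```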

Use robots.txt strategically

Your robots.txt file blocks entire sections of your site from being crawled, preserving budget for pages that drive traffic. Target administrative pages, thank-you pages, internal search results, and any URL patterns that serve no SEO value. Be surgical with this approach: blocked pages can't pass link equity, and because Google never sees their content, a blocked URL that other pages link to can still show up in results as a bare URL with no description.

Add these common exclusions to your robots.txt:

User-agent: Googlebot

Disallow: /admin/

Disallow: /cart/

Disallow: /checkout/

Disallow: /search/

Disallow: /*?print=

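Check your rules in Search Console's robots.txt report (under Settings), which replaced the standalone robots.txt Tester in late 2023, and spot-check important URLs with the URL Inspection tool so you don't accidentally block them. Balance protection of your crawl budget with the need to keep valuable content accessible to bots.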
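A quick local guard can also catch accidental blocks before you publish. The sketch below uses Python's standard `urllib.robotparser`, which only does plain prefix matching and does not understand `*` wildcards, so the `/*?print=` rule is left out here. The list of priority URLs is a hypothetical example.

```python
from urllib.robotparser import RobotFileParser

# Prefix-only rules from the example above; the wildcard rule is omitted
# because urllib.robotparser does not implement wildcard matching.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /search/
"""


def blocked_urls(robots_txt: str, urls, user_agent: str = "Googlebot"):
    """Return the URLs from the list that the given rules would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs; the last one should never end up blocked on a real site.
to_check = [
    "https://yoursite.com/admin/login",
    "https://yoursite.com/cart/",
    "https://yoursite.com/products/widget",
]
print(blocked_urls(ROBOTS_TXT, to_check))
```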

Step 3. Fix technical issues that slow crawling

Server performance problems and broken page elements force crawlers to work harder and slower, directly reducing how many pages they can visit during each crawl session. When Googlebot encounters slow response times, redirect chains, and broken links, it adjusts your crawl rate downward to avoid overloading your infrastructure. These technical issues create a negative feedback loop where poor site health leads to reduced crawl frequency, which then delays indexing of your new content and updates to existing pages.

Improve server response time

Aim for your server to respond to bot requests in under roughly 200 milliseconds; that figure is a common rule of thumb rather than a threshold Google publishes. Check your average host response time in Search Console's Crawl Stats report. If you see response times consistently above 500ms, your server becomes the bottleneck limiting how quickly bots can process your pages. Slow servers mean fewer pages crawled per session, directly cutting into your effective crawl budget regardless of how much demand Google has for your content.

Upgrade to faster hosting, implement server-side caching, and optimize your database queries to reduce processing time. Enable compression for HTML, CSS, and JavaScript files to reduce transfer time. Consider using a content delivery network (CDN) to serve static assets from geographically distributed servers, which speeds up bot access from Google's various crawler locations around the world.

Fast server response times let bots crawl more pages in less time, effectively multiplying your crawl budget without requiring Google to allocate more resources.

Eliminate redirect chains

Redirect chains occur when URL A redirects to URL B, which then redirects to URL C, forcing bots to make multiple requests to reach the final destination. Each redirect consumes additional crawl budget and increases the chance that bots abandon the chain before reaching your target page. Review your site for 301 and 302 redirects that point to other redirects instead of directly to the final URL.

Fix redirect chains by updating all links and redirects to point directly to the final destination URL. Run a site crawl with an SEO crawler, or manually trace redirect paths by checking HTTP headers. On an Apache server, your redirect rules should look like this:

Redirect 301 /old-page /final-page

NOT: Redirect 301 /old-page /intermediate-page
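If you keep your redirects in a list or spreadsheet, collapsing chains can be automated. The sketch below assumes a simple source-to-target mapping (a hypothetical format) and rewrites every source to point at its final destination, raising an error if it finds a loop.

```python
def flatten_redirects(redirects: dict) -> dict:
    """Rewrite each redirect so it points straight at the end of its chain."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until the target is no longer a redirect source itself.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


chain = {
    "/old-page": "/intermediate-page",
    "/intermediate-page": "/final-page",
}
for source, target in flatten_redirects(chain).items():
    print(f"Redirect 301 {source} {target}")
```

The output uses the same Apache `Redirect` syntax as the rules above, so each line can replace the chained rule it came from.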

Fix broken links and 404 errors

Every broken link wastes crawl budget on pages that return 404 status codes instead of serving actual content. Internal links pointing to deleted pages create dead ends that frustrate crawlers and users alike. Export your crawled URLs from Search Console and filter for 4xx errors, then systematically fix each broken link by updating the source page or redirecting the dead URL to relevant replacement content.

Remove or update links pointing to deleted pages, return 410 status codes for permanently removed content so bots stop attempting to crawl those URLs, and set up 301 redirects for pages you've moved or consolidated. Regular maintenance prevents crawl budget waste from accumulating over time.

Step 4. Help bots find and prioritize key pages

Once you've cleaned up crawl waste, you need to actively guide crawlers toward your most valuable content. Search engines discover pages through links and sitemaps, so your crawl budget optimization strategy must include clear pathways that lead bots directly to priority URLs. Without these signals, Google has little to go on when deciding what to crawl first and may spend resources on outdated archives instead of your newest product launches or blog posts that drive conversions.

Create an optimized XML sitemap

Your XML sitemap acts as a roadmap for search engines, listing every URL you want indexed along with optional metadata. Submit a clean sitemap containing only indexable pages that return 200 status codes. Exclude pages blocked by robots.txt, noindexed pages, and redirect URLs that waste crawler attention on content you don't want ranking.

A sitemap entry can carry these elements for each URL. Google reads <loc> and uses <lastmod> when it is consistently accurate, but ignores <changefreq> and <priority>, so include those two only if other search engines you care about use them:

<?xml version="1.0" encoding="UTF-8"?>

<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">

<url>

<loc>https://yoursite.com/priority-page</loc>

<lastmod>2026-02-15</lastmod>

<changefreq>weekly</changefreq>

<priority>1.0</priority>

</url>

</urlset>

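Keep individual sitemaps under 50,000 URLs and 50MB uncompressed. Sites exceeding these limits should use sitemap index files that group related content. Update your sitemap, including each changed URL's <lastmod>, as soon as you publish new pages, and make sure it is submitted in Search Console's Sitemaps report. Google retired its sitemap ping endpoint in 2023, so an accurate <lastmod> is now the main way to signal fresh content.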

Fresh, accurate sitemaps help crawlers discover your newest content within hours instead of waiting for bots to stumble across links organically.
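Generating the sitemap from your page list keeps it in step with what you publish. The sketch below builds one XML document per chunk of URLs using Python's standard library. The function name and page data are illustrative assumptions, and it neither checks the 50MB size limit nor writes the sitemap index file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def build_sitemaps(pages, max_urls: int = MAX_URLS_PER_SITEMAP):
    """Return a list of sitemap XML documents (bytes), one per chunk of URLs.

    pages is a list of (absolute URL, lastmod as YYYY-MM-DD) tuples.
    """
    documents = []
    for start in range(0, len(pages), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc, lastmod in pages[start:start + max_urls]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = loc
            ET.SubElement(url, "lastmod").text = lastmod
        documents.append(ET.tostring(urlset, encoding="utf-8", xml_declaration=True))
    return documents


# Hypothetical pages; max_urls is lowered only to show the chunking.
pages = [
    ("https://yoursite.com/priority-page", "2026-02-15"),
    ("https://yoursite.com/blog/new-post", "2026-02-16"),
    ("https://yoursite.com/products/widget", "2026-02-17"),
]
for number, document in enumerate(build_sitemaps(pages, max_urls=2), start=1):
    print(f"sitemap-{number}.xml: {len(document)} bytes")
```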

Build strategic internal links

Internal linking creates crawl paths that distribute link equity and guide bots through your site architecture. Place links to your most important pages in prominent locations like your homepage, main navigation, and footer where crawlers encounter them early in every session. Deeper pages buried five or six clicks from your homepage get crawled infrequently because bots may not reach them before exhausting your allocation.
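Click depth is straightforward to measure once you have a map of which pages link to which. The sketch below runs a breadth-first search from the homepage; the link graph is a hypothetical example of the kind of data a site crawl would give you.

```python
from collections import deque


def click_depths(links: dict, home: str = "/") -> dict:
    """Return the minimum number of clicks from the homepage to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
site_links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": ["/products/widget"],
    "/blog/post-1": ["/products/widget"],
    "/orphan": ["/"],
}
depths = click_depths(site_links)
unreachable = set(site_links) - set(depths)
print(depths)
print("not reachable from the homepage:", unreachable)
```

Pages with a depth of five or more, and pages that never appear in the result at all, are the first candidates for new internal links.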

Add contextual links within your content that connect related topics and reinforce your site's topical authority. New blog posts should link to your core service pages and product categories. Category pages should link to individual items and supporting content that answers common questions. This interconnected structure helps crawlers understand which pages matter most based on how frequently you reference them.

Quick recap and next steps

Crawl budget optimization comes down to four core actions: measure your current crawl rate in Search Console, eliminate crawl traps like parameter URLs and duplicate content, fix technical bottlenecks such as slow server responses and redirect chains, and guide crawlers to your priority pages through clean sitemaps and internal links. These changes directly affect how quickly Google discovers and indexes your content, turning published pages into rankings instead of leaving them stuck on your server.

Publishing great content means nothing if search engines never find it. RankYak automates daily content creation and automatically handles technical SEO elements like internal linking, sitemap updates, and content structure that keep crawlers coming back. Instead of fighting for every crawl slot manually, our platform ensures your new pages get discovered and indexed efficiently while you focus on growing your business.
