Technical SEO Audit Checklist: 18 Steps + Free PDF Download

Your site could be losing a significant share of its potential traffic because of technical issues you don't even know exist. Broken redirects. Slow-loading pages. Indexing problems. These silent killers prevent search engines from properly crawling, understanding, and ranking your content. Most site owners discover these issues only after their rankings drop or organic traffic plateaus.

A technical SEO audit fixes this. It's a systematic review of your site's backend health, checking everything from crawlability and site speed to mobile usability and internal linking. The best part: you don't need expensive tools or a technical background. You just need a clear checklist that tells you exactly what to look for and how to fix it.

This guide walks you through 18 essential steps to audit your site's technical SEO. You'll learn how to use free tools like Google Search Console and PageSpeed Insights to identify problems, understand what each issue means for your rankings, and get actionable solutions you can implement today. Plus, you can download a free PDF checklist to track your progress and ensure nothing gets missed.

What a technical SEO audit covers

A technical SEO audit examines the backend infrastructure of your website to identify issues that prevent search engines from crawling, indexing, and ranking your pages effectively. This process focuses on site architecture, server performance, and code quality rather than content quality or keyword optimization. You'll analyze everything from XML sitemaps and robots.txt files to page speed and mobile responsiveness.

Core technical elements

The audit covers six main areas that directly impact your search visibility. Crawlability and indexability form the foundation, ensuring Google can access and understand your pages. You'll check for blocked resources, crawl errors, and indexing directives that might hide important content from search engines. Site architecture and URLs come next, evaluating your internal linking structure, URL formats, and navigation patterns.

Page speed and Core Web Vitals measure how quickly your site loads, how quickly it responds to user interactions, and how stable its layout stays. The three metrics are Largest Contentful Paint (LCP), Interaction to Next Paint (INP), which replaced First Input Delay (FID) in March 2024, and Cumulative Layout Shift (CLS). Search engines use these signals to determine user experience quality. Mobile usability ensures your site works properly on smartphones and tablets, which now account for the majority of searches.

On-page technical elements include title tags, meta descriptions, header tags, canonical tags, and structured data markup. These help search engines understand your content context and display rich results in search. Finally, redirects and site health identify broken links, redirect chains, duplicate content, and server errors that waste crawl budget and confuse both users and search engines.

A complete technical SEO audit checklist addresses the foundational issues that, when fixed, allow your content to reach its full ranking potential.

What it doesn't cover

Technical audits focus exclusively on site infrastructure and performance, not content strategy or off-site factors. You won't find recommendations about keyword research, content creation, backlink building, or competitive analysis in a technical audit. Those elements belong to content audits and link audits. Understanding this distinction helps you prioritize your SEO efforts and know when to conduct each type of audit for maximum impact.

Step 1. Define goals and set up tools

You need to establish clear objectives before diving into a technical SEO audit checklist. Without defined goals, you'll waste time fixing issues that don't impact your site's performance. Start by asking what you want to achieve: faster page loads, better mobile rankings, higher crawl efficiency, or fixing indexation problems. Your goals will determine which audit areas you prioritize and how you measure success.

Set specific audit objectives

Define measurable targets for your audit based on your site's current problems. If your organic traffic dropped recently, focus on indexability and crawl errors. If users complain about slow loading, prioritize speed optimization. For new sites, concentrate on ensuring search engines can discover and index your pages properly.

Document your baseline metrics before starting. Record your current page speed scores, mobile usability status, and the number of indexed pages in Google Search Console. This data helps you track improvements and justify the time you invest in technical fixes. You should also note specific pages that need attention, such as high-value landing pages or conversion-focused content.

Setting clear objectives transforms your technical audit from a checkbox exercise into a strategic improvement plan that directly impacts your search visibility.

Essential free tools to install

You need four core tools to conduct a complete technical audit. Google Search Console provides direct insight into how Google crawls and indexes your site. Set up your property by verifying ownership through HTML file upload, DNS record, or Google Tag Manager. This tool shows crawl errors, indexing issues, and mobile usability problems straight from Google's perspective.

Google PageSpeed Insights measures your site's loading performance and Core Web Vitals scores. You'll get separate reports for mobile and desktop, plus specific recommendations for fixing speed issues. The tool also shows real user data when your site has enough traffic.

Install the free version of Screaming Frog SEO Spider, which crawls up to 500 URLs. This desktop application discovers broken links, duplicate content, missing meta tags, and redirect chains. You can export data to spreadsheets for easier analysis and tracking. The free version handles most small-site audits without a paid license. Larger sites will hit the 500-URL limit.

Finally, add a browser extension like the SEO Meta in 1 Click extension for Chrome. This lets you quickly inspect meta tags, headings, canonical tags, and robots directives without viewing page source code. You'll spend less time checking individual pages and spot patterns in technical issues faster.

These free tools give you everything you need to complete a professional technical audit without spending money on expensive platforms. You can always upgrade later if your site grows beyond the free tool limits.

Step 2. Crawl your site and find errors

You need to simulate how search engine bots crawl your website to uncover hidden technical problems. A crawler visits every accessible page on your site, following internal links, checking server responses, and identifying issues that block search engines from properly indexing your content. This step reveals critical errors like broken pages, redirect loops, and blocked resources that you can't spot by manually browsing your site.

Run Screaming Frog on your site

Open Screaming Frog SEO Spider and enter your domain URL in the search bar at the top. Click "Start" and let the tool crawl your entire site. The free version handles up to 500 URLs, which covers most small business sites. You'll see real-time data populate across multiple tabs showing status codes, page titles, meta descriptions, and response times as the crawler works through your pages.

Wait for the crawl to complete before analyzing results. The tool displays a progress bar showing how many URLs it has discovered and crawled. You can pause the crawl if needed, but completing the full scan gives you the most accurate picture of your site's technical health. To save a complete record, open the Internal tab, set the filter to HTML, and click Export to download the results as a spreadsheet.

Crawling your site with Screaming Frog reveals technical issues that stay invisible during normal browsing, because a visitor rarely clicks every link or notices a redirect chain.

Check Google Search Console for crawl errors

Navigate to Google Search Console and open the "Pages" report under the Indexing section. This report shows you exactly which pages Google successfully indexed and which ones encountered problems. You'll see two main sections: "Why pages aren't indexed" lists specific error types, while the indexed pages count tells you how many URLs Google added to its search index.

Click each reason to view the affected URLs. Common entries include "Discovered - currently not indexed," "Crawled - currently not indexed," and "Page with redirect." Other reasons point to specific causes, such as "Excluded by 'noindex' tag" or "Duplicate without user-selected canonical." You should also check the chart at the top of the report to see whether the number of non-indexed pages has increased recently, which might indicate a new technical problem.

Review the "Crawl Stats" report (under Settings in Search Console) to understand how often Google visits your site and how long your server takes to respond. Sudden drops in crawl requests or spikes in average response time signal server problems that need immediate attention. The report also breaks crawl requests down by response, so you can separate successful crawls from errors and redirects.

Analyze the error report

Sort your crawl data by HTTP status code to identify patterns. Focus on these problems first: 404 responses indicate broken pages, 5xx errors reveal server problems, and blocked resources prevent Google from rendering your pages properly. 301 and 302 codes aren't errors in themselves, but you should review them for chains and for internal links that should point straight to the final URL. Prioritize fixing errors that affect your most important pages and highest-traffic URLs.

Create a spreadsheet with columns for URL, error type, priority level, and fix status. List each problem URL with its specific issue so you can track your progress as you resolve errors. High-priority items include broken internal links on your homepage, redirect chains (a redirect that leads to another redirect), and any 5xx server errors that make pages completely inaccessible.
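If you'd rather script that spreadsheet than build it by hand, the Python sketch below turns a crawl export into tracking rows. It assumes a CSV with "Address" and "Status Code" columns, which Screaming Frog exports typically use. Check your own file's headers and rename them if they differ. The priority rules are illustrative, not a standard.

```python
import csv
import io


def classify_status(code: int) -> tuple:
    """Map an HTTP status code to an (error type, priority) pair."""
    if 200 <= code <= 299:
        return "ok", None
    if code in (404, 410):
        return "broken page", "high"
    if 500 <= code <= 599:
        return "server error", "high"
    if code in (301, 302, 307, 308):
        return "redirect", "medium"
    return "other", "medium"  # other 4xx codes, or no response recorded


def build_tracking_rows(csv_text: str) -> list:
    """Turn a crawl export into rows with URL, error type, priority, and fix status."""
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        error_type, priority = classify_status(int(record["Status Code"]))
        if priority is None:
            continue  # healthy 2xx pages don't need a tracking row
        rows.append({"URL": record["Address"], "Error type": error_type,
                     "Priority": priority, "Fix status": "open"})
    # Stable sort: high-priority rows first, crawl order kept within each level.
    rows.sort(key=lambda row: 0 if row["Priority"] == "high" else 1)
    return rows


sample = """Address,Status Code
https://yoursite.com/,200
https://yoursite.com/old-page/,301
https://yoursite.com/missing/,404
https://yoursite.com/checkout/,503
"""
for row in build_tracking_rows(sample):
    print(row)
```

To use it on a real export, read the file's text and pass it to build_tracking_rows. The output goes straight into your tracking spreadsheet.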

Check for duplicate content issues by comparing page titles and meta descriptions in your crawl data. Multiple pages with identical titles often indicate technical duplication rather than intentionally similar content. You'll also find pages that lack basic SEO elements like title tags or meta descriptions. Flag these in your technical SEO audit checklist for immediate fixes.
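To find duplicate and missing titles in bulk, you can group your crawl data by title. This minimal sketch assumes you've already loaded the export into a dictionary that maps each URL to its title. The function name is hypothetical. The sketch ignores case and extra whitespace, so near-identical titles land in the same group.

```python
from collections import defaultdict


def find_title_problems(titles: dict) -> tuple:
    """titles maps URL -> title text. Returns (duplicate groups, URLs with no title)."""
    groups = defaultdict(list)
    missing = []
    for url, title in titles.items():
        key = " ".join(title.split()).lower()  # ignore case and extra whitespace
        if key:
            groups[key].append(url)
        else:
            missing.append(url)
    duplicates = {key: urls for key, urls in groups.items() if len(urls) > 1}
    return duplicates, missing


titles = {
    "/shoes/blue/": "Blue Running Shoes",
    "/shoes/blue/?ref=nav": "Blue  running shoes",
    "/contact/": "",
    "/shoes/red/": "Red Running Shoes",
}
duplicates, missing = find_title_problems(titles)
print(duplicates)  # {'blue running shoes': ['/shoes/blue/', '/shoes/blue/?ref=nav']}
print(missing)     # ['/contact/']
```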

Step 3. Fix crawlability and indexability

Your site can't rank if search engines can't crawl or index your pages. Crawlability issues prevent bots from accessing your content, while indexability problems stop Google from adding your pages to search results. These two factors form the foundation of your technical SEO audit checklist because they determine whether your content even gets a chance to rank. You need to identify and fix these barriers immediately.

Fix robots.txt blocking issues

Your robots.txt file controls which parts of your site search engines can access. Navigate to yoursite.com/robots.txt to view your current file. Look for "Disallow" directives that might block important pages or resources. Common mistakes include blocking your entire site with "Disallow: /", blocking CSS and JavaScript files that Google needs to render pages, or accidentally blocking your blog or product pages.

Remove any rules that block critical content or resources. Your robots.txt should allow access to pages you want indexed while blocking low-value sections like admin areas, internal search results, or cart pages. Here's a basic example. Adjust the paths to match your own site:

User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /search/
Allow: /

Sitemap: https://yoursite.com/sitemap.xml

Check your changes with the robots.txt report in Google Search Console (under Settings). It shows the version of the file Google last fetched and flags any parsing errors. Google retired its older robots.txt Tester in 2023. To confirm that a specific URL is accessible, run it through the URL Inspection tool.
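You can also sanity-check rules locally with Python's standard-library urllib.robotparser before you upload a new file. This sketch parses the example rules above and reports which URLs a crawler may fetch. The yoursite.com URLs are placeholders. This parser is simpler than Google's. It doesn't understand the * and $ path wildcards, and it applies the first matching rule in file order instead of Google's most-specific-rule logic. Treat its answers as a quick check, not a substitute for Search Console.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /search/
Allow: /

Sitemap: https://yoursite.com/sitemap.xml
"""


def check_urls(robots_txt: str, urls: list, agent: str = "Googlebot") -> dict:
    """Return {url: True if allowed} under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network fetch
    return {url: parser.can_fetch(agent, url) for url in urls}


results = check_urls(ROBOTS_TXT, [
    "https://yoursite.com/blog/technical-seo/",
    "https://yoursite.com/admin/settings",
])
for url, allowed in results.items():
    print("allowed" if allowed else "BLOCKED", url)
```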

Once Google refetches the corrected file (it generally caches robots.txt for up to 24 hours), previously blocked pages become crawlable again and can be indexed, often quickly.

Review and update meta robots tags

Check your page source code for meta robots tags that prevent indexing. Search for "noindex" in your HTML using browser developer tools or your Screaming Frog crawl data. Check the HTTP response headers too, because an X-Robots-Tag: noindex header has the same effect. Pages with a noindex directive won't appear in search results, even if they're technically accessible. You'll find meta robots tags in the <head> section of your HTML:

<meta name="robots" content="noindex, nofollow">

Remove noindex tags from pages you want to rank. Keep them only on pages like thank you pages, internal search results, or duplicate content variations. Your most important pages should either have no robots meta tag (which defaults to "index, follow") or an explicit "index, follow" directive. Check your homepage, key landing pages, and top-performing content first.
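To check many pages for noindex at once, a small HTML parser can collect meta robots directives and combine them with any X-Robots-Tag header value. This is a sketch using Python's built-in html.parser. The names is_noindexed and RobotsMetaParser are hypothetical. The sketch assumes you already have each page's HTML and header value, for example from your crawler's saved output.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for part in attributes.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_noindexed(html: str, x_robots_tag: str = "") -> bool:
    """True if meta robots tags or the X-Robots-Tag header value block indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # For header values like "googlebot: noindex", keep only the part after the colon.
    header = {part.split(":")[-1].strip().lower() for part in x_robots_tag.split(",")}
    blocking = {"noindex", "none"}  # "none" is shorthand for noindex, nofollow
    return bool(blocking & (parser.directives | header))


print(is_noindexed('<head><meta name="robots" content="noindex, nofollow"></head>'))  # True
```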

Audit your canonical tags while reviewing meta tags. Each page should have a self-referencing canonical tag pointing to its own URL, unless it's a duplicate of another page. Incorrect canonicalization sends conflicting signals to search engines about which version to index. Your canonical tag should look like this:

<link rel="canonical" href="https://yoursite.com/page-url/">

Submit and validate your XML sitemap

Generate or update your XML sitemap to include all indexable pages. WordPress sites can use plugins like Yoast SEO or Rank Math to automatically create sitemaps. For other platforms, use online sitemap generators or create them manually. Your sitemap should list only pages you want indexed, excluding noindex pages, redirect URLs, or pages blocked by robots.txt.

Submit your sitemap through Google Search Console under the "Sitemaps" section. Enter your sitemap URL (usually yoursite.com/sitemap.xml) and click "Submit." Google will process your sitemap and report any errors. After a few days, open the Page indexing report and filter it by your sitemap to see how many of the submitted URLs Google has indexed.

Validate your sitemap against the sitemaps.org protocol, which Google follows. Each URL entry must include the page location (<loc>) and can optionally include the last modified date (<lastmod>). Keep individual sitemap files under 50,000 URLs and 50MB uncompressed. For larger sites, create a sitemap index file that references multiple sitemaps. Fix any validation errors before resubmitting to ensure Google can process your sitemap correctly.
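If your platform doesn't generate sitemaps for you, here's a minimal Python sketch that builds them with the standard library's ElementTree. It splits the URL list into files of at most 50,000 entries and adds a sitemap index when one file isn't enough. The file names (sitemap-1.xml and so on) are assumptions. The sketch doesn't check the 50MB size limit, which typical URL lists stay well under.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file limit in the sitemap protocol


def build_urlset(entries: List[Tuple[str, Optional[str]]]) -> bytes:
    """Build one <urlset> document from (loc, lastmod-or-None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2025-01-20
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


def build_sitemaps(entries, base_url: str) -> dict:
    """Return {filename: XML bytes}; adds a sitemap index when one file isn't enough."""
    if len(entries) <= MAX_URLS_PER_FILE:
        return {"sitemap.xml": build_urlset(entries)}
    files = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for n, start in enumerate(range(0, len(entries), MAX_URLS_PER_FILE), start=1):
        name = f"sitemap-{n}.xml"
        files[name] = build_urlset(entries[start:start + MAX_URLS_PER_FILE])
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = f"{base_url}/{name}"
    files["sitemap.xml"] = ET.tostring(index, encoding="utf-8", xml_declaration=True)
    return files


pages = [("https://yoursite.com/", "2025-01-20"), ("https://yoursite.com/about/", None)]
for name, data in build_sitemaps(pages, "https://yoursite.com").items():
    print(name)
    print(data.decode("utf-8"))
```

Only pass in URLs you want indexed. Filter out noindex pages, redirects, and robots.txt-blocked paths before calling build_sitemaps.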

Step 4. Optimize site architecture and URLs

Your site architecture determines how search engines understand and prioritize your content. A clear hierarchy with logical URL patterns helps both users and crawlers navigate your site efficiently. Poor architecture creates orphaned pages, wastes crawl budget, and confuses search engines about which pages matter most. You'll examine your URL structure, internal linking, and content organization to ensure every important page gets discovered and properly indexed.

Check your URL structure

Review your URLs for consistency and readability. Clean URLs should describe page content using simple words separated by hyphens, not underscores or random parameters. Your technical SEO audit checklist should flag URLs longer than 75 characters, URLs containing special characters, and URLs using dynamic parameters like "?id=123" where a descriptive path would work better. Each URL should follow a consistent pattern that reflects your site hierarchy.

Look for these common URL problems in your crawl data:

- Uppercase letters mixed with lowercase (URL paths are case-sensitive, so /Page and /page are different URLs)

- Duplicate URLs with trailing slashes vs. without (site.com/page vs. site.com/page/)

- Session IDs or tracking parameters in URLs

- Multiple ways to access the same page

- Non-descriptive URLs like "/product1234" instead of "/blue-running-shoes"
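The checks in the list above are easy to automate against your crawl export. This sketch flags problems within each URL and finds trailing-slash pairs. The 75-character threshold comes from this checklist. The function names are hypothetical. Session IDs and tracking parameters show up under the query-parameter check.

```python
from urllib.parse import urlsplit

MAX_URL_LENGTH = 75  # threshold suggested in this checklist


def url_issues(url: str) -> list:
    """List the clean-URL problems found in a single URL."""
    parts = urlsplit(url)
    issues = []
    if len(url) > MAX_URL_LENGTH:
        issues.append("longer than 75 characters")
    if parts.path != parts.path.lower():
        issues.append("uppercase letters in path")
    if "_" in parts.path:
        issues.append("underscores instead of hyphens")
    if parts.query:
        issues.append("query parameters")
    return issues


def trailing_slash_duplicates(urls: list) -> list:
    """Pairs like /page and /page/ that both appear in the crawl."""
    seen = set(urls)
    return [(url, url + "/") for url in urls
            if not url.endswith("/") and url + "/" in seen]


print(url_issues("https://yoursite.com/Product_Page?id=123"))
```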

Fix these issues by implementing 301 redirects from old URLs to clean versions. Your redirect map should point all variations to a single canonical URL. For WordPress sites, adjust your permalink settings to use post names instead of date-based or numeric structures. Other platforms may need .htaccess rules (on Apache servers) or other server-side redirects to enforce clean URL patterns.

Clean, descriptive URLs help search engines understand your content and can also improve click-through rates, since users tend to trust readable links more than cryptic strings.

Review internal linking patterns

Analyze how your pages connect to each other through internal links. Every page should be reachable within three clicks from your homepage, with your most important content receiving the most internal links. Check your crawl data for orphaned pages that have zero internal links pointing to them. These pages stay invisible to search engines unless you add them to your sitemap or create linking pathways.
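Click depth is a breadth-first search over your internal link graph, starting from the homepage. The sketch below assumes you've turned your crawler's source/destination link export into a mapping from each page to the pages it links to. That mapping format and the function names are hypothetical. Pages from your sitemap that the search never reaches have no link path from the homepage, which is how orphaned pages show up.

```python
from collections import deque


def click_depths(links: dict, home: str) -> dict:
    """Breadth-first search: fewest clicks from the homepage to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_linking(links: dict, home: str, known_pages, max_depth: int = 3):
    """Return (pages deeper than max_depth, known pages no link path reaches)."""
    depths = click_depths(links, home)
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    unreachable = sorted(set(known_pages) - set(depths))
    return too_deep, unreachable


links = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/blog/post-2/"],
    "/blog/post-2/": ["/blog/post-3/"],
}
sitemap_pages = ["/", "/blog/", "/shop/", "/landing-page/"]
print(audit_linking(links, "/", sitemap_pages))
# (['/blog/post-3/'], ['/landing-page/'])
```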

Build a logical linking structure that groups related content together. Your main navigation should link to top-level categories, which then link to subcategories and individual pages. Add contextual links within your content that connect related topics using descriptive anchor text. Avoid generic phrases like "click here" and instead use keywords that describe the linked page's content.

Check how many internal links each page contains to avoid diluting their value. A page with hundreds of links passes relatively little value to each destination, while a page with a handful of strategic links sends stronger signals. Your homepage should prioritize linking to your most valuable pages rather than cluttering navigation with every possible destination.

Step 5. Improve speed and core web vitals

Page speed affects both your search rankings and your conversion rates. Core Web Vitals became part of Google's ranking signals with the page experience update in 2021, making these metrics essential to your technical SEO audit checklist. Slow pages frustrate users and can reduce how much Google crawls, because Googlebot slows down on servers that respond slowly. You need to measure three specific metrics: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), which replaced First Input Delay (FID) in March 2024, and Cumulative Layout Shift (CLS).

Measure your current Core Web Vitals scores

Test your site using PageSpeed Insights at https://pagespeed.web.dev/. Enter each of your important URLs and check both mobile and desktop scores separately. The tool shows field data from real users when available, plus lab data from simulated tests. Focus on the field data first since it reflects actual user experience. Record your baseline scores for LCP, INP, and CLS before making changes.

Check your Core Web Vitals report in Google Search Console for a broader view. This report groups your URLs by performance status: Good, Needs Improvement, or Poor. You'll see which page types cause problems most often. Click into each group to identify patterns like all blog posts loading slowly or specific categories having layout shift issues.
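Google rates each metric as Good, Needs Improvement, or Poor using fixed thresholds, evaluated at the 75th percentile of page loads in field data. This small Python helper encodes those thresholds so you can label the baseline numbers you recorded. The dictionary, function name, and sample values are for illustration only.

```python
# (good upper bound, needs-improvement upper bound) for each metric
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless score
}


def rate(metric: str, value: float) -> str:
    """Classify a 75th-percentile value as Good, Needs Improvement, or Poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "Good"
    if value <= needs_improvement:
        return "Needs Improvement"
    return "Poor"


baseline = {"LCP": 3.1, "INP": 180, "CLS": 0.02}  # example numbers, not real data
for metric, value in baseline.items():
    print(metric, value, rate(metric, value))
```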

Fix Largest Contentful Paint (LCP)

Largest Contentful Paint measures how long your largest visible element takes to load, which should stay under 2.5 seconds. This metric typically involves your hero image, heading, or main content block. Slow LCP usually results from oversized images, render-blocking resources, or slow server response times. You fix this by optimizing these specific elements.

Compress your images using modern formats like WebP or AVIF instead of JPEG or PNG. Your images should be sized appropriately for their display container, not scaled down by CSS. Add explicit width and height attributes to your image tags to prevent layout shifts:

<img src="hero-image.webp" alt="Description" width="1200" height="600">

Implement lazy loading for below-the-fold images using the native loading attribute. Your above-the-fold images should load immediately without lazy loading to ensure fast LCP. Preload critical resources like fonts and hero images in your HTML head:

<link rel="preload" as="image" href="hero-image.webp">

<link rel="preload" as="font" href="font.woff2" type="font/woff2" crossorigin>

Fixing LCP often produces the most noticeable speed improvements because it targets the main element users actually see and wait for.

Optimize First Input Delay and Interaction to Next Paint

First Input Delay measured how long the browser took to start responding to a visitor's first interaction, such as a click or tap. In March 2024, Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital. INP tracks responsiveness across all interactions throughout the entire page visit. Keep INP at or below 200 milliseconds by reducing JavaScript execution time and breaking up long tasks that block the main thread.

Minimize your JavaScript bundles by removing unused code and splitting large files into smaller chunks. Defer non-critical scripts using the async or defer attributes:

<script src="analytics.js" defer></script>

<script src="ads.js" async></script>

Inline the critical CSS needed for above-the-fold content and load the rest of your stylesheets asynchronously. This keeps CSS from blocking the first render while maintaining visual consistency.

Reduce Cumulative Layout Shift (CLS)

Cumulative Layout Shift tracks unexpected movement of page elements as your page loads. Keep CLS at or below 0.1 by reserving space for images, ads, and dynamic content before they load. Unstable layouts frustrate users and cause accidental clicks on the wrong elements.
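The "cumulative" in CLS has a specific meaning. Shifts are grouped into session windows. A window closes when a gap of one second or more passes between shifts, or once the window reaches five seconds. The page's CLS is the total of its worst window. This sketch reproduces that grouping from a list of (time in ms, shift score) pairs. It assumes you've already dropped shifts that happened right after user input, since those don't count. The function name is hypothetical.

```python
def cumulative_layout_shift(shifts):
    """shifts: (start_time_ms, score) pairs in time order, excluding shifts after recent input.

    Returns the largest session-window total, which is how CLS is reported.
    """
    largest = 0.0
    window_total = 0.0
    window_start = previous = None
    for time_ms, score in shifts:
        same_window = (
            previous is not None
            and time_ms - previous < 1000      # less than 1 s since the last shift
            and time_ms - window_start < 5000  # window still shorter than 5 s
        )
        if same_window:
            window_total += score
        else:
            window_start, window_total = time_ms, score  # start a new session window
        previous = time_ms
        largest = max(largest, window_total)
    return largest


# Two quick small shifts early on, then one larger shift much later.
print(cumulative_layout_shift([(100, 0.05), (600, 0.04), (3000, 0.2)]))  # 0.2
```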

Set explicit dimensions for all images, videos, and ad slots in your HTML or CSS. This prevents content from jumping when media loads. For dynamic content like ads, use min-height in CSS to maintain layout stability:

.ad-container {
  min-height: 250px;
  width: 300px;
}

Avoid inserting content above existing content unless it happens in response to user interaction. For web fonts, font-display: swap prevents invisible text, but it can still shift the layout when the web font replaces the fallback font. Using font-display: optional, or preloading the font file, minimizes that shift.

Step 6. Check mobile usability and rendering

Mobile devices account for the majority of searches, making mobile optimization critical to your technical SEO audit checklist. Google uses mobile-first indexing, which means it primarily crawls and indexes the mobile version of your site rather than the desktop version. Your site must render properly on smartphones and tablets, with readable text, accessible buttons, and no horizontal scrolling. Even minor mobile issues can hurt your rankings and drive users away before they engage with your content.

Test mobile-friendliness with Google tools

Google retired its standalone Mobile-Friendly Test in December 2023, so use Lighthouse instead. Open Chrome DevTools, choose the mobile device setting in the Lighthouse panel, and run an audit. PageSpeed Insights runs the same Lighthouse checks. Look for problems like text too small to read, tap targets too close together, or a missing viewport tag. Test your homepage, key landing pages, and several blog posts to identify patterns across different page types.

Google retired the Mobile Usability report in Search Console at the same time. For a site-wide view, check the mobile results in the Core Web Vitals report and spot-check one page from each template, since pages built on the same template usually share the same problems. Common issues include content wider than the screen, text too small to read, and clickable elements too close together. Prioritize fixes based on page importance and traffic volume.

Google's mobile-first index means your mobile version determines your rankings even for desktop searches, making mobile optimization non-negotiable for search visibility.

Check for mobile rendering issues

Inspect how your site renders JavaScript and CSS on mobile using the URL Inspection tool in Google Search Console. Enter a URL and click "Test Live URL," then select "View Tested Page" to see the rendered screenshot. Compare this against what you see in your mobile browser. Differences indicate rendering problems where resources fail to load or JavaScript doesn't execute properly on Google's mobile crawler.

Look for missing images, broken layouts, or content that appears on desktop but disappears on mobile. Your viewport meta tag should be present in your HTML head to ensure proper scaling:

<meta name="viewport" content="width=device-width, initial-scale=1">

Test your site on actual mobile devices using different browsers. Android Chrome and iOS Safari handle rendering differently, so check both platforms if possible. Your font sizes should be at least 16px for body text to prevent zoom requirements. Buttons and tap targets need at least 48x48 pixels of space to avoid accidental clicks.

Fix common mobile usability problems

Increase spacing between clickable elements to at least 8 pixels of separation. Mobile users tap with fingers, not precise mouse cursors, so cramped navigation menus and closely stacked links create frustration. Your navigation should either use a hamburger menu or space items vertically with generous padding.

Remove or resize elements that force users to swipe sideways to view content. Tables often cause this problem on mobile. Convert wide tables to responsive card layouts, or wrap them in a container that scrolls horizontally on its own so the rest of the page stays in place. Your images should have max-width: 100% in CSS to prevent overflow:

img {
  max-width: 100%;
  height: auto;
}

Eliminate pop-ups and interstitials that cover the main content on mobile. Google may rank pages lower when intrusive interstitials block access to content immediately after a visitor arrives from search results. Interstitials required by law, such as cookie consent notices, aren't affected when used responsibly. Your newsletter signups, cookie notices, and promotional banners should be dismissible and take up minimal screen space on mobile devices.

Step 7. Audit on-page elements and schema

Your on-page elements communicate directly with search engines about your content's topic and structure. These HTML tags tell Google what each page covers, how it relates to other content, and which results should display rich snippets. Missing or poorly optimized on-page elements confuse search engines and reduce your click-through rates, even when you rank well. You'll examine title tags, meta descriptions, header structure, and structured data to ensure every page sends clear, accurate signals.

Review title tags and meta descriptions

Check every page for unique, descriptive title tags using your crawl data from Screaming Frog. Sort by title length and identify pages with titles shorter than 30 characters or longer than 60 characters. Short titles waste valuable space in search results, while long titles get truncated and lose impact. Your title should include your target keyword near the beginning and accurately describe the page content without keyword stuffing.

Look for duplicate title tags across multiple pages. Your technical SEO audit checklist should flag any identical titles because they make pages compete against each other in search results. Export your title tag data and use spreadsheet functions to identify duplicates. Each page needs a unique value proposition in its title that differentiates it from similar pages on your site.

Audit your meta descriptions for length and uniqueness using the same process. Descriptions should stay between 120 and 155 characters to display fully in most search results. Pages without meta descriptions let Google generate snippets automatically, which often produces less compelling text. Write descriptions that include your keyword naturally and create a clear call to action that encourages clicks.
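For the length checks, a short script can label every title and description in your export. The ranges below are the ones this checklist recommends. Google truncates snippets by pixel width, not character count, so treat these as approximations. The helper names are hypothetical.

```python
TITLE_RANGE = (30, 60)         # characters, as recommended in this checklist
DESCRIPTION_RANGE = (120, 155)


def length_flag(text: str, low: int, high: int) -> str:
    """Label text as missing, too short, too long, or ok for the given range."""
    length = len(text.strip())
    if length == 0:
        return "missing"
    if length < low:
        return f"too short ({length})"
    if length > high:
        return f"too long ({length})"
    return "ok"


def audit_snippet(title: str, description: str) -> dict:
    """Check one page's title and meta description against the recommended ranges."""
    return {
        "title": length_flag(title, *TITLE_RANGE),
        "description": length_flag(description, *DESCRIPTION_RANGE),
    }


print(audit_snippet("Home", ""))  # {'title': 'too short (4)', 'description': 'missing'}
```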

Check header tag hierarchy

Examine your header structure to ensure proper semantic organization. Each page should have exactly one H1 tag that describes the main topic. Your H2 tags break content into major sections, while H3 through H6 tags create subsections within those divisions. Search engines use this hierarchy to understand your content organization and topic relationships.

Find pages with missing H1 tags or multiple H1s using your crawler. View the page source code to confirm your H1 contains your primary keyword and matches user intent. Your headings should descend in order without skipping a level; for example, don't jump from an H2 straight to an H4. Proper hierarchy helps both search engines and screen readers navigate your content logically.
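To audit heading structure across many pages, you can extract the heading levels from each page's HTML and check them in order. This sketch uses Python's built-in html.parser. It flags pages that don't have exactly one H1, plus any place where the level jumps down by more than one. The name heading_issues is hypothetical.

```python
from html.parser import HTMLParser


class HeadingParser(HTMLParser):
    """Records the level (1-6) of every heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list:
    """Flag a missing or repeated H1 and any skipped heading level."""
    parser = HeadingParser()
    parser.feed(html)
    issues = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        issues.append(f"expected one H1, found {h1_count}")
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:
            issues.append(f"skipped level: H{prev} -> H{cur}")
    return issues


print(heading_issues("<h1>Guide</h1><h2>Setup</h2><h4>Details</h4>"))
# ['skipped level: H2 -> H4']
```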

Structured header tags create a content outline that search engines use to identify the main topics and subtopics covered on each page.

Validate schema markup implementation

Test your structured data using Google's Rich Results Test at search.google.com/test/rich-results. Enter your URL and check for implementation errors or warnings. Google recommends JSON-LD over microdata or RDFa because it keeps the markup in a single script block, separate from your visible HTML. That makes JSON-LD easier to implement and maintain. The script can go in your page's <head> or <body>:

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "Your Article Title",
  "author": {
    "@type": "Person",
    "name": "Author Name"
  },
  "datePublished": "2025-01-15",
  "dateModified": "2025-01-20"
}
</script>

Add schema types that match your page type, such as Article, Product, or LocalBusiness. Each implementation should include all required properties, plus recommended properties when the data exists. Use nested objects to provide additional context about organizations, authors, and related entities. Google no longer shows HowTo rich results and limits FAQ rich results to well-known government and health websites, so FAQ and HowTo markup rarely earns a rich result.
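If you generate pages from a CMS or template, building the JSON-LD from data instead of hand-writing it avoids syntax errors. This Python sketch serializes a dictionary with json.dumps. It also escapes "</" so that a value containing "</script>" can't end the script block early. The function name is hypothetical, and the data mirrors the Article example above.

```python
import json


def jsonld_script(data: dict) -> str:
    """Serialize a schema.org object into a JSON-LD <script> tag."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # "<\/" is a valid JSON escape for "</", so the data is unchanged when parsed.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Your Article Title",
    "author": {"@type": "Person", "name": "Author Name"},
    "datePublished": "2025-01-15",
    "dateModified": "2025-01-20",
}
print(jsonld_script(article))
```

Run the resulting page through the Rich Results Test as usual. Generating the markup this way only rules out syntax mistakes, not missing properties.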

Fix canonical tag issues

Verify every page includes a self-referencing canonical tag pointing to its own URL. Check your crawl data for pages missing canonical tags or those pointing to different URLs. Your canonical should use absolute URLs with your preferred protocol (HTTPS) and domain version (www or non-www). The tag belongs in your HTML <head>:

<link rel="canonical" href="https://www.yoursite.com/page-url/">

Identify pages with conflicting canonicalization signals where the canonical tag points to one URL but redirects send users to another. These mixed signals confuse search engines about which version to index. Your canonical tags should match your redirect destinations and preferred URL patterns consistently across your entire site.
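You can cross-check both signals automatically once your crawler gives you each page's HTML and its final URL after redirects. This sketch extracts the canonical link and compares it with that final URL after lowercasing the scheme and host. The name canonical_issues is hypothetical. A real audit may need extra normalization, for example for default ports.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attributes.get("href"):
            self.hrefs.append(attributes["href"])


def normalize(url: str) -> str:
    """Lowercase scheme and host; keep path and query as they are (paths are case-sensitive)."""
    parts = urlsplit(url)
    query = f"?{parts.query}" if parts.query else ""
    return f"{parts.scheme.lower()}://{parts.netloc.lower()}{parts.path or '/'}{query}"


def canonical_issues(html: str, final_url: str) -> list:
    """Compare a page's canonical tag with the URL its redirects end at."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.hrefs:
        return ["missing canonical"]
    issues = []
    if len(set(parser.hrefs)) > 1:
        issues.append("conflicting canonical tags")
    canonical = parser.hrefs[0]
    if urlsplit(canonical).scheme not in ("http", "https"):
        issues.append("canonical is not an absolute URL")
    elif normalize(canonical) != normalize(final_url):
        issues.append(f"canonical {canonical} differs from final URL {final_url}")
    return issues


page = '<head><link rel="canonical" href="https://yoursite.com/old-url/"></head>'
print(canonical_issues(page, "https://www.yoursite.com/page-url/"))
```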

Step 8. Clean up content, links, and redirects

Your internal links, redirects, and duplicate content directly affect how search engines distribute authority across your site. Broken links waste crawl budget and create dead ends for both users and bots. Redirect chains slow page loading and force crawlers through extra hops. Duplicate content confuses search engines about which version to rank. This cleanup step removes friction from your site architecture and ensures every page contributes positively to your overall search performance.

Find and fix broken internal links

Scan your crawl data for 404 errors and broken links that prevent users from reaching content. Sort by response code in Screaming Frog and export all URLs returning 404 status. Your broken links fall into two categories: pages that were deleted or moved, and pages with typos in their URLs. You need different fixes for each type depending on whether the destination page still exists in another form.

Fix each broken link using this process:

- Identify the link source by checking which pages link to the broken URL

- Determine if a replacement page exists with similar content

- Update the link to point directly to the correct destination

- Remove the link entirely if no suitable replacement exists
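To work through that process quickly, group broken targets by the pages that link to them, so you know exactly which source pages to edit. This sketch assumes you've exported internal links as (source, target) pairs, plus each target's status code. The function name is hypothetical. Targets linked from the most pages come first.

```python
from collections import defaultdict


def broken_link_report(links, status: dict) -> list:
    """links: (source page, target URL) pairs; status: target URL -> HTTP status code."""
    sources_by_target = defaultdict(set)
    for source, target in links:
        if status.get(target) in (404, 410):
            sources_by_target[target].add(source)
    # Targets linked from the most pages first: fixing them helps the most pages.
    report = [(target, sorted(sources)) for target, sources in sources_by_target.items()]
    report.sort(key=lambda item: len(item[1]), reverse=True)
    return report


links = [("/", "/old-pricing/"), ("/blog/a/", "/old-pricing/"),
         ("/blog/a/", "/contact/"), ("/blog/b/", "/typo-page/")]
status = {"/old-pricing/": 404, "/contact/": 200, "/typo-page/": 404}
for target, sources in broken_link_report(links, status):
    print(target, "linked from", sources)
```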

Your homepage and navigation menus should never contain broken links. The homepage is your most visited page, and navigation links appear on every page. Check these high-visibility areas first in your technical SEO audit checklist before moving to internal content links. You can also set up 301 redirects from broken URLs to relevant replacement pages instead of updating every link manually. Even so, updating links to point directly at the final URL is better because it avoids an extra redirect hop.

Fixing broken links immediately improves user experience and prevents search engines from wasting crawl budget on pages that don't exist.

Audit and optimize redirects

Review your redirect chains to eliminate unnecessary hops between URLs. A redirect chain occurs when URL A redirects to URL B, which redirects to URL C. Each hop adds latency for users and another request for crawlers, and Googlebot stops following a chain after a limited number of hops. Your redirects should point directly from the old URL to the final destination in a single hop.

Check your crawl data for redirect status codes (301, 302, 307, 308). Export these URLs and test each redirect path manually or use your crawler's redirect chain report. Update your redirects to skip intermediate steps:

Before:

example.com/old-page → example.com/temp-page → example.com/final-page

After:

example.com/old-page → example.com/final-page

example.com/temp-page → example.com/final-page
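Flattening chains is easy to script once you know each redirect's single hop. The sketch below assumes a hypothetical dict mapping each redirecting path to its immediate target (for example, built from your crawler's redirect export) and rewrites every entry to point at its final destination, failing loudly on loops.

```python
def flatten_redirects(redirects, max_hops=10):
    """Point every redirecting URL straight at its final destination.

    redirects: dict mapping each source path to its immediate target.
    Raises ValueError on a redirect loop or a chain longer than max_hops.
    """
    flattened = {}
    for source, target in redirects.items():
        seen = {source}
        hops = 1
        # Keep following while the current target itself redirects.
        while target in redirects:
            if target in seen:
                raise ValueError(f"Redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
            hops += 1
            if hops > max_hops:
                raise ValueError(f"Chain from {source} is longer than {max_hops} hops")
        flattened[source] = target
    return flattened


if __name__ == "__main__":
    chains = {"/old-page": "/temp-page", "/temp-page": "/final-page"}
    for source, final in flatten_redirects(chains).items():
        print(f"{source} -> {final}")
```

Run on the example above, it produces the same single-hop mapping as the "After" list: both /old-page and /temp-page point to /final-page.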

Replace temporary 302 redirects with permanent 301 redirects unless you genuinely plan to restore the original URL. Search engines treat 302s as temporary and may not transfer full ranking signals to the destination page. On Apache servers, a permanent redirect in your .htaccess file looks like this:

Redirect 301 /old-page/ https://example.com/final-page/
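If you have many redirects to write, you can generate these lines instead of typing each one. This minimal sketch assumes a hypothetical map of old paths to new paths (for example, from a migration spreadsheet) and a base URL for your site; it assumes paths contain no spaces, and you should review its output before pasting it into .htaccess.

```python
from urllib.parse import urljoin


def htaccess_redirect_lines(redirects, base_url):
    """Build Apache mod_alias "Redirect 301" lines from an old-path -> new-path map.

    Assumes paths contain no spaces; relative targets are resolved against base_url.
    """
    lines = []
    for source, target in sorted(redirects.items()):
        if not source.startswith("/"):
            raise ValueError(f"Source must be a URL path starting with '/': {source}")
        lines.append(f"Redirect 301 {source} {urljoin(base_url, target)}")
    return lines


if __name__ == "__main__":
    # Hypothetical redirect map; replace with your own old and new paths.
    redirect_map = {"/old-page/": "/final-page/", "/temp-page/": "/final-page/"}
    print("\n".join(htaccess_redirect_lines(redirect_map, "https://example.com")))
```

Each generated line follows the same format as the example directive above, with the target written as a full URL.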

Remove or consolidate duplicate content

Identify duplicate pages that target the same keywords and serve the same purpose. Use your crawl data to find pages with identical or very similar title tags, meta descriptions, or content. Common duplicate content issues include printer-friendly versions, session ID parameters, and multiple URLs accessing the same content through different paths.
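A first pass at finding duplicates can be scripted from a crawl export that includes each page's URL, title, and meta description. The sketch below flags two kinds of candidates: URLs that become identical once tracking or session parameters are stripped, and pages with exactly matching titles and descriptions. The parameter list and field names are hypothetical assumptions to adapt to your site, and "very similar" (rather than identical) content would need a fuzzy comparison this sketch does not attempt.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change page content on this site.
IGNORED_PARAMS = {"sessionid", "sid", "phpsessid", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url):
    """Lowercase scheme/host, drop ignored params and fragments, trim trailing slashes."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


def find_duplicate_groups(pages):
    """Group URLs that normalize to the same address or share a title and description.

    pages: iterable of dicts with "url", "title", and "description" keys.
    """
    groups = defaultdict(set)
    for page in pages:
        groups["url:" + normalize_url(page["url"])].add(page["url"])
        signature = f'{page["title"].strip().lower()}|{page["description"].strip().lower()}'
        groups["meta:" + signature].add(page["url"])
    # The same set of URLs can match on both keys; keep each group once.
    unique = {frozenset(urls) for urls in groups.values() if len(urls) > 1}
    return sorted(sorted(group) for group in unique)


if __name__ == "__main__":
    pages = [
        {"url": "https://example.com/shoes?sessionid=abc", "title": "Shoes", "description": "Buy shoes"},
        {"url": "https://example.com/shoes", "title": "Shoes", "description": "Buy shoes"},
        {"url": "https://example.com/print/shoes", "title": "Shoes", "description": "Buy shoes"},
        {"url": "https://example.com/hats", "title": "Hats", "description": "Buy hats"},
    ]
    for group in find_duplicate_groups(pages):
        print(group)
```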

Decide whether to consolidate, redirect, or canonicalize each duplicate based on its traffic and backlinks. Pages with significant organic traffic or quality backlinks deserve 301 redirects to preserve their value. Low-value duplicates can simply use canonical tags pointing to your preferred version. Delete pages that serve no purpose and have zero external value, then implement redirects to relevant alternatives.
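Writing the decision rule down as a small function helps you apply it consistently across a long list of duplicates. This is a sketch of the rule described in this section, not a standard formula: the thresholds, the field names, and the `serves_purpose` flag are hypothetical, so set them from your own Search Console and backlink data.

```python
from dataclasses import dataclass
from html import escape

# Hypothetical thresholds; tune them to your own traffic and backlink data.
MIN_MONTHLY_CLICKS = 50
MIN_REFERRING_DOMAINS = 1


@dataclass
class DuplicatePage:
    url: str
    monthly_clicks: int       # e.g. organic clicks from Search Console
    referring_domains: int    # e.g. from your backlink tool
    serves_purpose: bool      # still useful to visitors (e.g. a print view)?


def choose_action(page):
    """Apply the redirect / canonical / delete rule to one duplicate page."""
    if page.monthly_clicks >= MIN_MONTHLY_CLICKS or page.referring_domains >= MIN_REFERRING_DOMAINS:
        return "redirect"   # 301 to the preferred version to keep its value
    if page.serves_purpose:
        return "canonical"  # keep the page, point search engines at the preferred URL
    return "delete"         # remove it, then 301 to a relevant alternative


def canonical_tag(preferred_url):
    """Return the <link> element to place in the duplicate page's <head>."""
    return f'<link rel="canonical" href="{escape(preferred_url)}">'


if __name__ == "__main__":
    preferred = "https://example.com/shoes"
    for page in [
        DuplicatePage("https://example.com/shoes-2019", 120, 0, True),
        DuplicatePage("https://example.com/print/shoes", 3, 0, True),
        DuplicatePage("https://example.com/shoes-old", 0, 0, False),
    ]:
        action = choose_action(page)
        detail = canonical_tag(preferred) if action == "canonical" else preferred
        print(f"{page.url}: {action} -> {detail}")
```

Checking traffic and backlinks first means a page with real value is never quietly canonicalized or deleted just because it also looks redundant.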

Free technical SEO audit checklist PDF

You need a structured way to track your progress through all 18 audit steps without missing critical items. Our free downloadable PDF checklist provides a printable reference that covers every technical element discussed in this guide. You can check off completed tasks, note issues that need attention, and create a permanent record of your audit findings. The checklist organizes tasks by priority level so you tackle the most impactful issues first.

Download and use the checklist

The PDF includes 18 main audit steps with sub-tasks listed under each section. You'll find space to record your baseline metrics, note problem URLs, and track completion dates for every fix. Each checklist item links back to the corresponding section in this guide for quick reference when you need implementation details.

Print the checklist and keep it beside your computer while conducting your audit. Mark items as you complete them using this simple system:

- Not started: Leave blank

- In progress: Add a dash (-)

- Completed: Add a checkmark (✓)

- Needs help: Add an asterisk (*)

Using a physical checklist helps you avoid skipping steps or forgetting to revisit problem areas that need ongoing attention after your initial fixes.

Your technical SEO audit checklist also becomes a record you can use to track improvements over time. Save completed checklists, compare them against future audits, and measure how your fixes affected metrics like crawl efficiency, page speed scores, and indexed page counts.
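If you record the same metrics in each audit, comparing two audits is simple arithmetic. This minimal sketch assumes hypothetical metric names and treats a decrease as an improvement only for the metrics listed in `LOWER_IS_BETTER`; adjust that set to match what you track, since for some metrics (such as indexed pages) a change in either direction may need a closer look.

```python
# Hypothetical metric names where a lower number means the site improved.
LOWER_IS_BETTER = {"broken_links", "redirect_chains", "crawl_errors"}


def compare_audits(previous, current):
    """Return {metric: (change, improved)} for metrics recorded in both audits."""
    report = {}
    for name in sorted(previous.keys() & current.keys()):
        change = current[name] - previous[name]
        improved = change < 0 if name in LOWER_IS_BETTER else change > 0
        report[name] = (change, improved)
    return report


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    q1 = {"broken_links": 37, "indexed_pages": 812, "mobile_pagespeed": 54}
    q2 = {"broken_links": 4, "indexed_pages": 905, "mobile_pagespeed": 71}
    for metric, (change, improved) in compare_audits(q1, q2).items():
        print(f"{metric}: {change:+d} ({'better' if improved else 'not better'})")
```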

Final thoughts

Your technical SEO audit checklist gives you everything you need to identify and fix the backend issues that hold your rankings back. These 18 steps address the foundation that makes all your other SEO efforts possible. You can't rank content that search engines can't crawl, index, or understand. Fixing technical problems first creates the conditions for sustainable organic growth across your entire site.

Run through this checklist quarterly to catch new issues before they damage your traffic. Your site changes constantly with new content, updates, and feature additions. Each change introduces potential technical problems that need monitoring. Regular audits keep your technical foundation strong as your site grows.

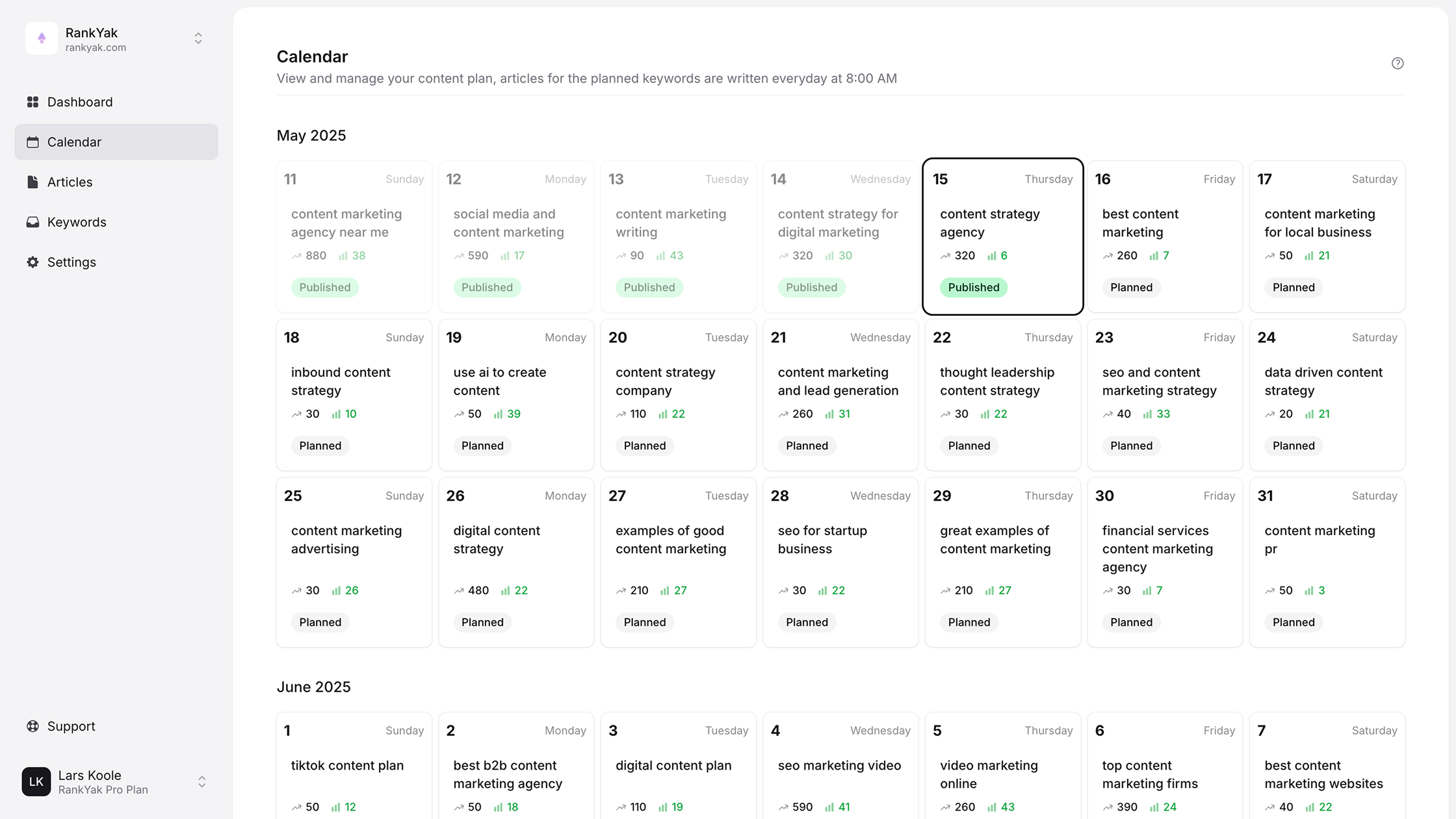

Manual technical audits take significant time and expertise to complete properly. RankYak automates your entire SEO workflow, from keyword research through content creation and publishing, while monitoring technical health automatically. You get daily optimized content that ranks without spending hours on manual audits and fixes.

Get Google and ChatGPT traffic on autopilot.

Start today and generate your first article within 15 minutes.