What Is SEO Automation? Tasks, Tools, Benefits, Challenges

SEO automation means using software and AI to take on the repetitive parts of search engine optimization so you don’t have to. Instead of manually pulling Search Console reports, checking rankings, clustering keywords, drafting content briefs, fixing obvious on-page issues, or hunting for internal link opportunities, tools can do that work on a schedule and hand you clean outputs. It doesn’t replace strategy, subject-matter expertise, or editorial judgment; it frees them up. Done right, automation shortens cycle time from idea to publish, improves consistency, and reduces human error across your SEO operations.

This guide explains exactly how to make that happen. You’ll learn why automation matters now, who benefits (and when it’s overkill), and how modern workflows combine data connectors, crawlers, and LLMs. We’ll map the tasks you can automate today versus what should stay human-led, highlight tool categories with examples, and compare all‑in‑one platforms to point solutions. You’ll get an implementation plan for your first 30 days, the KPIs to track, common risks and governance practices, plus costs and ROI math. By the end, you’ll know where to start and what to automate first. Let’s get specific.

Why SEO automation matters now

Two shifts made SEO automation a priority: the rise of LLM‑powered tools and the explosion of always‑on data. Modern platforms can now analyze SERPs, draft content outlines, and spot technical issues in minutes, not days. Pair that with nonstop signals from Search Console, GA4, and your crawler, and it’s clear that manual workflows can’t keep up with the cadence today’s rankings demand.

Automation turns that overload into leverage. Scheduled audits catch broken links and regressions before they cost traffic. Auto‑refreshing Looker Studio dashboards remove weekly reporting overhead. Rank trackers and AI Overviews monitors alert you to visibility changes. Agent builders (like Gumloop or n8n) combine scraping, enrichment, and LLMs to generate briefs, cluster keywords, and propose internal links—so humans focus on strategy and edits, not busywork.

The payoff is practical: time savings, cleaner data, faster cycle times, and more consistent publishing—all drivers of better ROI. With competitors scaling content operations and search expanding into chat surfaces, teams that automate the repeatable parts of SEO will out‑ship and out‑learn those that don’t. Now is the moment to systematize the work and protect your calendar for high‑impact decisions.

Who SEO automation is for (and when it’s overkill)

If your week is clogged with pulling reports, wrangling keywords, and pushing the same CMS buttons, you’re the target user for SEO automation. It’s built for lean teams that need consistent publishing, faster feedback loops, and fewer manual errors—without hiring a platoon. Automation shines when volume and cadence matter more than bespoke, one‑off tasks.

Who benefits most

Teams that match these patterns tend to see outsized gains. Use this as a quick fit check before you invest.

- Lean teams with big roadmaps: Solo founders, SMBs, and small content teams that must ship daily or weekly.

- Agencies and multi‑site operators: Repeatable workflows across clients, geos, or product lines.

- Content‑led businesses and publishers: High post velocity where briefs, clustering, and interlinking compound.

- Ecommerce and catalogs: Large SKU sets needing templated on‑page fixes, audits, and internal links.

- Data‑driven marketers: Comfortable letting dashboards and alerts replace manual monitoring.

When automation is overkill

Some scenarios still reward a mostly manual approach. If you’re here, start smaller, then layer automation later.

- Early‑stage or tiny sites: A handful of pages and rare updates—manual is faster and clearer.

- Heavy SME or compliance needs: Regulated niches and thought leadership that demand deep human review.

- Undefined brand voice/process: No style guide, workflow, or QA? Automation will amplify inconsistency.

- One‑off campaigns or experiments: Short‑lived pages don’t justify building automations yet.

How SEO automation works (workflows, data, and LLMs)

At a practical level, SEO automation stitches together data sources, schedulers, and AI to run repeatable workflows. Think “triggers + data + logic + outputs.” Orchestration layers (e.g., agent builders like Gumloop or n8n) connect Search Console, crawlers, and rank trackers to LLMs that analyze or draft, then hand clean deliverables to your CMS, docs, or dashboards.

The building blocks

Automation stacks usually include a few modular pieces you can swap in and out as you scale.

- Data inputs: Google Search Console, GA4, site crawlers (e.g., Screaming Frog SEO Spider), SERP scrapers, backlink exports.

- Orchestration: No/low‑code flow builders (e.g., Gumloop, n8n) plus schedulers and webhooks.

- AI/LLMs: ChatGPT or similar models for clustering, briefs, rewrites, and summarization.

- Outputs: CMS drafts, Google Docs, Google Sheets, and auto‑updating Looker Studio dashboards.

A simple end‑to‑end workflow

Here’s a common “idea to publish” loop many teams start with and improve over time.

- Schedule weekly trigger.

- Pull top queries and pages from Search Console.

- Cluster queries; scrape current SERPs for each cluster.

- Use an LLM to generate a content brief and on‑page recommendations.

- Create a draft in your CMS or a Google Doc with internal link suggestions.

- Update reporting in Looker Studio and notify the editor for review.

Flow: GSC → Cluster → SERP scrape → LLM brief → CMS draft → Dashboard/alert
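The loop above can be sketched as a Python skeleton. It is a minimal sketch under stated assumptions, not a working integration. Every function name (`pull_top_queries`, `cluster_queries`, `scrape_serp`, `draft_brief`, `run_weekly`) is hypothetical, and each step returns canned data. The naive first-word grouping stands in for whatever SERP-overlap or LLM clustering you actually use. A scheduler such as cron, n8n, or Gumloop would call `run_weekly` once a week.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Brief:
    cluster: List[str]
    outline: List[str]
    internal_links: List[str] = field(default_factory=list)
    status: str = "pending_review"  # an editor must approve before anything ships


def pull_top_queries(site: str) -> List[str]:
    # Hypothetical stub: replace with a Search Console performance query for `site`.
    return ["seo automation", "seo automation tools", "automate seo reports"]


def cluster_queries(queries: List[str]) -> List[List[str]]:
    # Hypothetical stub: groups queries by their first word only.
    groups: Dict[str, List[str]] = {}
    for query in queries:
        groups.setdefault(query.split()[0], []).append(query)
    return list(groups.values())


def scrape_serp(query: str) -> List[str]:
    # Hypothetical stub: replace with an official SERP API; returns result headings.
    return [f"Top result heading for '{query}'"]


def draft_brief(cluster: List[str], serp_headings: List[str]) -> Brief:
    # Hypothetical stub: an LLM would draft the outline here; metrics stay in GSC/GA4.
    return Brief(cluster=cluster, outline=[f"H2: {h}" for h in serp_headings])


def run_weekly(site: str) -> List[Brief]:
    briefs = []
    for cluster in cluster_queries(pull_top_queries(site)):
        headings = scrape_serp(cluster[0])  # treat the first query as the cluster's head term
        briefs.append(draft_brief(cluster, headings))
    # Next steps (not shown): create CMS drafts, refresh dashboards, notify the editor.
    return briefs


if __name__ == "__main__":
    for brief in run_weekly("https://example.com"):
        print(brief.cluster, brief.status)
```

Every brief starts in `pending_review`. Even in this skeleton, nothing reaches the CMS without a human step.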

Where LLMs add value (and guardrails)

LLMs excel at classification (intent), summarizing SERPs, drafting outlines, proposing interlinks, and polishing titles/descriptions. Keep numbers and KPIs sourced from systems of record (GSC/GA4) to avoid hallucinations, and add approval gates before site changes go live (many on‑page tools support human approval). That combination—deterministic data plus AI assistance—delivers speed without sacrificing control.
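You can enforce the "numbers come from systems of record" rule by injecting metrics into the prompt yourself. Then flag any figure in the model's output that you didn't supply. The sketch below assumes you already have page metrics from Search Console as a dict. The function names and the simple number regex are illustrative assumptions. A flagged draft would go to a human reviewer rather than being rejected automatically.

```python
import re
from typing import Dict, List

# Matches integers, decimals, thousands separators, and an optional percent sign.
NUMBER = re.compile(r"\d+(?:[.,]\d+)*%?")


def canonical(value: float) -> str:
    # 1240.0 -> "1240", 3.1 -> "3.1"
    return str(int(value)) if float(value).is_integer() else str(value)


def build_prompt(page: str, gsc_metrics: Dict[str, float]) -> str:
    facts = "\n".join(
        f"- {name}: {canonical(v)} (source: Search Console)" for name, v in gsc_metrics.items()
    )
    return (
        f"Suggest a title and meta description for {page}.\n"
        f"Use only these figures and do not invent others:\n{facts}"
    )


def unsourced_numbers(llm_output: str, gsc_metrics: Dict[str, float]) -> List[str]:
    """Return numbers in the model's output that don't match any supplied metric."""
    allowed = {canonical(v) for v in gsc_metrics.values()}
    return [
        n for n in NUMBER.findall(llm_output)
        if n.rstrip("%").replace(",", "") not in allowed
    ]
```

The check produces false positives, such as a year in a title. That is acceptable when its only job is to route a draft to human review.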

Core SEO tasks you can automate today

You don’t have to automate everything to feel the lift. Start with frequent, low‑judgment work where software is faster and more accurate than a person. The goal is simple: keep humans on strategy and editing while automation handles collection, classification, and first drafts.

- Reporting dashboards: Auto‑pull Google Search Console and GA4 into Looker Studio; refresh on schedule and email summaries.

- Rank tracking and AI Overviews alerts: Monitor positions and surface changes automatically with a rank tracker; route alerts to Slack/email.

- Recurring site audits: Schedule crawls (e.g., Screaming Frog SEO Spider) to export broken links, missing tags, and slow pages.

- Keyword discovery and clustering: Batch group queries and map them to pages with a keyword tool or a simple LLM workflow.

- SERP‑to‑brief generation: Scrape top results, extract common questions, and have an LLM draft a one‑page brief for editors.

- On‑page recommendations at scale: Use optimization tools (e.g., Page Optimizer Pro or Yoast) to suggest titles, headings, and schema with human approval.

- Internal linking suggestions: Combine a fresh crawl with your content index to auto‑propose links when new posts go live.

- Content decay detection: Flag pages losing clicks by comparing GSC periods or using a content decay feature to queue refreshes.

- CMS drafting/publishing: Create drafts and push approved updates to WordPress, Webflow, or Shopify via APIs/webhooks.
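Content decay detection from the list above comes down to comparing clicks per page across two equal-length periods. The following is a minimal sketch. It assumes you have exported page-level clicks from Search Console for both periods into dicts. The 100-click floor and the 20% drop threshold are illustrative assumptions to tune, not recommendations from any tool.

```python
from typing import Dict, List, Tuple


def find_decaying_pages(
    previous: Dict[str, int],
    current: Dict[str, int],
    min_clicks: int = 100,
    drop_threshold: float = 0.2,
) -> List[Tuple[str, float]]:
    """Return (page, fractional_drop) for pages whose clicks fell by >= drop_threshold."""
    decaying = []
    for page, before in previous.items():
        if before < min_clicks:
            continue  # low-traffic pages swing too much in percentage terms
        after = current.get(page, 0)  # a page missing from the current export had no clicks
        drop = (before - after) / before
        if drop >= drop_threshold:
            decaying.append((page, round(drop, 3)))
    # Biggest relative losses first, so the refresh queue starts with the worst decay.
    return sorted(decaying, key=lambda item: item[1], reverse=True)
```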

Automate these and you’ll cut hours of busywork each week while improving consistency. Next, we’ll draw the line on which SEO tasks should stay human‑led or only partially automated—and why that guardrail protects quality and trust.

SEO tasks to keep human-led (or only partially automate)

Automation is a force multiplier, but it shouldn’t drive the bus. Some parts of SEO depend on judgment, lived experience, and brand nuance. Use tools to collect data, summarize, and draft, then put humans in charge of choices and final outputs. Automating the entire process is unwise, especially the parts that earn trust.

- Strategy and prioritization: Define goals, choose battles, and sequence work based on business context—not just scores in a tool.

- Keyword selection (not just analysis): Let automation surface volumes and clusters; have humans pick targets and map intent to pages.

- SME/thought leadership content: First‑hand experience, original angles, and defensible claims should be written or heavily edited by experts.

- Brand voice and editorial standards: Headlines, tone, examples, and CTAs need a human editor to keep consistency and credibility.

- Compliance and YMYL review: Regulated topics and sensitive advice require legal/SME sign‑off, even if an outline came from an LLM.

- High‑impact technical changes: Architecture, redirects, and indexation rules should pass human QA before deployment.

- Outreach and relationships: AI can draft notes, but personalization, negotiation, and partnership judgment stay human.

Keep these human‑led, and let automation handle the heavy lifting around data prep, briefs, and monitoring.

Benefits of SEO automation you can quantify

Automation isn’t just “faster”; you can measure the lift. Establish a 30–90 day baseline for your current workflow, flip on automation, and compare. The biggest wins show up as reclaimed hours, shorter cycle times, steadier publishing, and earlier fixes that protect traffic—all traceable in your reporting stack.

- Hours saved and cost impact: Track weekly time spent on reporting, audits, and briefs. Monthly savings = hours_saved_per_month * hourly_rate; Payback (months) = tool_cost / (hours_saved_per_month * hourly_rate).

- Cycle time to publish: Measure average days from keyword selection to publication. Aim for a step‑change post‑automation.

- Publishing consistency: Posts shipped per week and percent on schedule. Content velocity = published_posts / month.

- Issue detection and fix latency: Mean time to detect and remediate broken links, missing tags, or slow pages from scheduled crawls.

- Rank and visibility responsiveness: Time from alert to action in your rank tracker/AI Overviews monitor; net position change on tracked terms.

- Traffic and conversions from faster iteration: Compare GSC/analytics before vs. after. Incremental clicks = post_automation_clicks - baseline_clicks.

- Quality/error reduction: Count 4xx/5xx errors and duplicate titles, and compare on‑page optimization scores from your auditing/optimization tools pre vs. post.

- Cost avoidance: Contrast tool spend with freelancers or added headcount you no longer need for repetitive tasks.
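The formulas above translate directly into a few helper functions that you can run against your baseline and post-automation numbers. This is a sketch with hypothetical inputs. `tool_cost` is used exactly as in the payback formula above.

```python
def monthly_savings(hours_saved_per_month: float, hourly_rate: float) -> float:
    return hours_saved_per_month * hourly_rate


def payback_months(tool_cost: float, hours_saved_per_month: float, hourly_rate: float) -> float:
    # tool_cost / (hours_saved_per_month * hourly_rate), as in the list above
    return tool_cost / monthly_savings(hours_saved_per_month, hourly_rate)


def content_velocity(published_posts: int, months: float) -> float:
    return published_posts / months


def incremental_clicks(post_automation_clicks: int, baseline_clicks: int) -> int:
    return post_automation_clicks - baseline_clicks


# Hypothetical example: 10 hrs/mo saved at $50/hr, $1,000 to recover,
# 24 posts over 3 months, and clicks rising from 4,800 to 5,400 per period.
print(monthly_savings(10, 50), payback_months(1000, 10, 50))
print(content_velocity(24, 3), incremental_clicks(5400, 4800))
```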

Document these in a Looker Studio view so stakeholders see the operational and revenue impacts alongside cost and payback.

Challenges and risks to plan for

Automation amplifies whatever you feed it—good or bad. The biggest pitfalls are accuracy, oversight, and overreach: letting LLMs fabricate facts, pushing unreviewed changes to production, or trying to automate judgment-heavy work. Add in security and vendor risk, and you need clear guardrails before you scale.

- AI hallucinations and bad data: Never let models generate KPIs. Pull numbers from systems of record (Search Console, GA4), require sources in prompts, and store provenance alongside outputs.

- Quality and brand drift: Enforce a style guide, run human edits on all public copy, and spot‑check samples weekly for tone, claims, and intent match.

- Unapproved CMS changes: Use staging, approval queues, and versioning. Gate live edits behind human review and keep rollback paths ready.

- Lock‑in and data loss: Prefer tools with exports and APIs. Keep a canonical keyword/content inventory in your own sheet or database.

- Reliability and rate limits: Add retry logic, timeouts, and alerts on failed runs so silent errors don’t pile up.

- Security and privacy: Grant least‑privilege access (especially to GSC/analytics), avoid sending PII to third‑party models, and review vendors’ data policies.

- TOS and scraping risk: Respect robots and platform terms; use official APIs where possible to avoid blocks and compliance issues.

- Reporting drift: Validate calculations in automated reports and reconcile against native dashboards monthly.
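For the reliability item, a common pattern is exponential backoff with jitter around each API call to Search Console, a rank tracker, or an LLM. The sketch below is generic Python, not a feature of any particular tool. Which exceptions count as transient is an assumption to adapt, and so are the attempt count and delays. The `sleep` parameter exists so the function can be tested without actually waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(); on a transient error wait base_delay * 2**n (+ jitter) and retry."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # surface the failure so the run is marked failed and alerts fire
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
    raise AssertionError("unreachable")
```

After the last attempt fails, the function re-raises the error instead of swallowing it. A silent error is exactly what the alerting described above is meant to prevent.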

Tool categories for SEO automation (with examples)

Most teams assemble a stack across a few clear categories. Use this as a menu: pick one tool per job, then wire them into simple flows. The goal is to automate collection, analysis, and first drafts—while keeping humans on strategy and approvals.

- Orchestration/AI agents: Build no‑code flows that scrape, enrich, and prompt LLMs. Examples: Gumloop (AI agents for SEO workflows), n8n (self‑hosted automation).

- Crawlers and site audits: Schedule scans to find broken links, slow pages, and missing tags. Examples: Screaming Frog SEO Spider, Sitebulb.

- Rank tracking and SERP monitoring: Track positions and alert on changes, including AI Overviews. Examples: SE Ranking (adds AI Overviews tracking).

- Keyword discovery and clustering: Surface opportunities and group queries by intent. Examples: LowFruits (SERP analysis/clustering), Semrush/Ahrefs (research suites).

- Content brief/optimization assistants: Turn SERPs into briefs and on‑page recommendations. Examples: Page Optimizer Pro (AI‑guided on‑page), Clearscope (content decay detection and optimization), Yoast SEO (on‑page checks in CMS).

- Content generation (editor‑assisted): Speed up outlines and drafts for human edit. Examples: ChatGPT (research and headings), Surfer AI (AI‑assisted writing with optimizer).

- CMS automation and implementation: Push approved changes at scale with safeguards. Examples: Alli AI (CMS‑level on‑page changes with approval).

- Reporting and BI: Auto‑refresh performance views for stakeholders. Examples: Looker Studio (dashboards using GSC/GA4).

- GSC intelligence and anomaly detection: Mine Search Console for issues and opportunities. Examples: SEO Stack (GSC‑driven analytics and projections).

- Design/landing page acceleration: Generate wireframes/components to ship SEO pages faster. Examples: Relume (AI‑assisted sitemaps and page layouts).

Start with one reliable tool in each category you’ll use weekly, then connect them into a single “idea → brief → draft → publish → report” loop.
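Keyword clustering tools differ in their internals. One widely used idea is SERP overlap: two queries belong together if the same URLs rank for both. The following is a minimal greedy sketch. It assumes you already have the top-ranking URLs for each keyword, from a SERP API or a rank tracker export. The threshold of three shared URLs is an assumption. This is not necessarily how any specific tool, such as LowFruits, works.

```python
from typing import Dict, List, Set


def cluster_by_serp_overlap(serps: Dict[str, Set[str]], min_shared: int = 3) -> List[List[str]]:
    """Greedy clustering: a keyword joins the first cluster whose seed keyword
    shares at least `min_shared` ranking URLs with it; otherwise it seeds a new cluster."""
    clusters: List[List[str]] = []
    seed_urls: List[Set[str]] = []
    # Alphabetical order keeps results deterministic; sorting by search volume is a common alternative.
    for keyword in sorted(serps):
        urls = serps[keyword]
        for cluster, seed in zip(clusters, seed_urls):
            if len(urls & seed) >= min_shared:
                cluster.append(keyword)
                break
        else:
            clusters.append([keyword])
            seed_urls.append(urls)
    return clusters
```

Each resulting cluster is a candidate for a single page, but a human still decides which clusters to target.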

All-in-one platforms vs point solutions

You can automate SEO two ways: consolidate on an all‑in‑one that covers the idea → brief → draft → publish → report loop, or assemble best‑of‑breed point tools for each job. All‑in‑ones trade flexibility for speed to value and simpler governance; point solutions maximize depth and control but increase tool sprawl, integration work, and QA overhead. Your choice hinges on team size, compliance needs, and how opinionated your workflows are.

When to choose an all‑in‑one

- Lean team, big cadence: You need daily outputs without hiring more people.

- Single pane of glass: Unified workflows, approvals, and reporting in one place.

- Predictable cost: One subscription beats stacking several licenses.

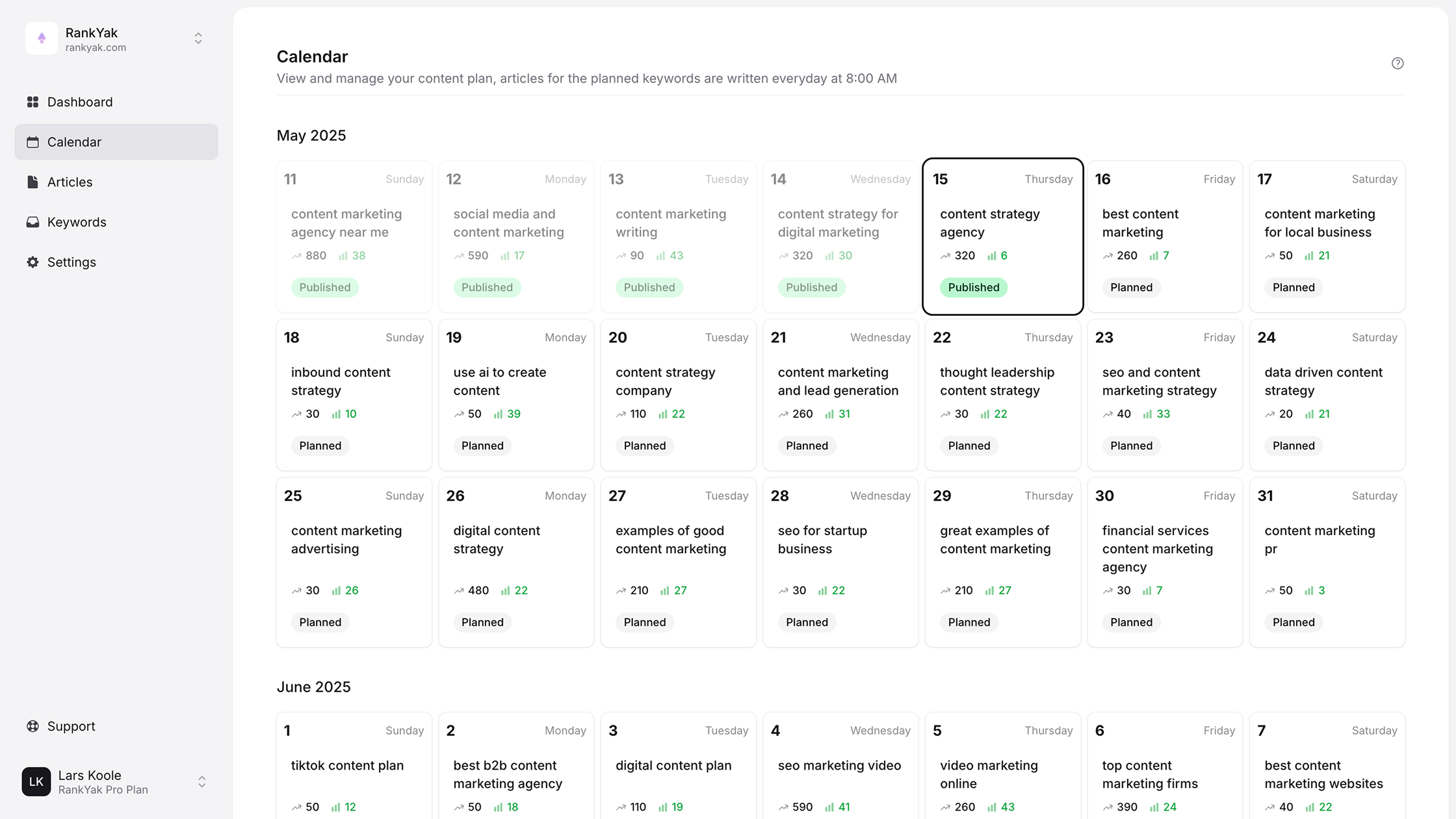

- Built‑in loop: Keyword discovery, content plan, drafting, auto‑publishing, and backlinks in one flow (e.g., RankYak covers this end‑to‑end).

When to choose point solutions

- Specialist depth required: You need advanced rank tracking, content decay, or on‑page analysis beyond a suite’s defaults.

- Custom or self‑hosted flows: Prefer n8n‑style control, scripts, or internal policies.

- Complex orgs and compliance: Separate tools, separate permissions, and stricter change control.

- Tooling already in place: Keep Clearscope for optimization, SE Ranking for tracking, a crawler for audits, and wire them with an agent builder.

Most teams end up hybrid: run a default all‑in‑one loop, then augment with a few deep point tools where it moves the needle.

Implementation roadmap: how to start automating SEO in 30 days

Keep the scope tight: one site, one content type, one primary KPI. Your goal in 30 days is to replace manual reporting, audits, and briefs with reliable automations, then ship at least one “automation‑assisted” article end‑to‑end. Build guardrails first, then speed. Treat everything as a repeatable flow you’ll refine weekly, not a one‑time project.

- Week 1 (baseline and plumbing): Define KPIs and capture a manual‑time baseline. Connect Google Search Console and GA4. Stand up a Looker Studio dashboard. Run a full crawl (e.g., Screaming Frog) and export issues. Create a central Google Sheet as your `keyword → page` source of truth.

- Week 2 (monitoring on autopilot): Schedule weekly crawls and exports. Add rank tracking and alerts (e.g., SE Ranking). Wire a simple agent/workflow (Gumloop or n8n): GSC → Sheets → anomaly check → Slack/email. Validate every alert against native dashboards.

- Week 3 (briefs and on‑page at scale): Build a SERP‑to‑brief flow: cluster terms (e.g., LowFruits or prompts), scrape top results, generate a one‑page brief with headings, FAQs, and internal link ideas. Use an optimizer (e.g., Page Optimizer Pro/Yoast) for recommendations with human approval.

- Week 4 (draft, publish, harden): Produce one article via the new brief workflow; push a CMS draft via API/webhook if available; add internal links. Close the loop in dashboards. Add QA checklists, access controls, retries, and a rollback plan. Document the runbook and next automations to tackle.
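The Week 2 anomaly check can start as a simple z-score test on daily clicks. This sketch rests on several assumptions. `daily_clicks` is a list of daily totals pulled from Search Console, oldest first and latest last. The threshold of 3 standard deviations is arbitrary. `SLACK_WEBHOOK_URL` is a placeholder for your own incoming-webhook URL. Slack incoming webhooks accept a JSON body with a `text` field.

```python
import json
import urllib.request
from statistics import mean, pstdev
from typing import List

SLACK_WEBHOOK_URL = "https://hooks.slack.com/services/XXX"  # placeholder; keep the real URL in a secret store


def is_click_anomaly(daily_clicks: List[int], z_threshold: float = 3.0, min_history: int = 7) -> bool:
    """True if the latest day is more than z_threshold std devs below the prior days."""
    if len(daily_clicks) < min_history + 1:
        return False  # not enough baseline to judge
    history, latest = daily_clicks[:-1], daily_clicks[-1]
    mu, sigma = mean(history), pstdev(history)
    if sigma == 0:
        return latest < mu
    return (mu - latest) / sigma > z_threshold


def slack_payload(site: str, latest: int, baseline: float) -> bytes:
    text = f":warning: {site}: {latest} clicks yesterday vs. ~{baseline:.0f}/day baseline"
    return json.dumps({"text": text}).encode("utf-8")


def send_alert(payload: bytes) -> None:
    # Not called in this sketch; POSTs the JSON payload to the webhook.
    req = urllib.request.Request(
        SLACK_WEBHOOK_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        resp.read()
```

In line with the Week 2 advice, validate the first few alerts against the native Search Console view before trusting the threshold.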

Metrics and KPIs to measure impact

Treat automation like any other performance program: baseline first, then track a tight set of KPIs in a single dashboard. Pair operational metrics (time, throughput, latency) with outcome metrics (rank, traffic, conversions). Pull numbers from systems of record—Search Console, GA4, your crawler, and rank tracker—and annotate when new automations go live so cause and effect are visible.

| KPI | How to calculate | Source | Cadence |
|---|---|---|---|
| Time saved (hrs/mo) | Σ (baseline_manual_time - automated_time) by task | Time tracking/run logs | Monthly |
| Cycle time to publish (days) | publish_date - keyword_commit_date | CMS + content tracker | Weekly |
| Content velocity & on‑time rate | posts_published/mo and on_time_posts / planned_posts | CMS/planning sheet | Weekly |
| Crawl health & MTTR | open_issues, issues_closed/mo, mean_time_to_remediate | Screaming Frog/Site audit | Weekly |
| Rank distribution & AIO visibility | % Top 3/10, AI Overviews presence on tracked terms | Rank tracker | Weekly |
| Organic clicks, CTR, conversions | clicks, impressions, CTR, sessions → conversions | GSC + GA4 | Weekly |
| Quality guardrails | editorial_edits/1k words, fact_corrections/draft | Editorial QA log | Monthly |

Set targets and alert thresholds (e.g., CTR drops >15%, MTTR >7 days). Compare 30‑day pre‑automation to 30/60/90‑day post‑automation windows. Roll everything into Looker Studio so stakeholders see ops gains and revenue impact together. For finance, keep a simple view: ROI% = (incremental_profit - tool_cost) / tool_cost * 100 and Payback_months = tool_cost / (hours_saved * hourly_rate).
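Once you export the raw fields, most rows in the table reduce to one-line calculations. This is a sketch with hypothetical inputs. The field names mirror the table, and the 15% CTR threshold is the example given above.

```python
from datetime import date
from statistics import mean
from typing import List, Tuple


def cycle_time_days(keyword_commit_date: date, publish_date: date) -> int:
    return (publish_date - keyword_commit_date).days


def on_time_rate(on_time_posts: int, planned_posts: int) -> float:
    return on_time_posts / planned_posts if planned_posts else 0.0


def mttr_days(closed_issues: List[Tuple[date, date]]) -> float:
    """Mean time to remediate, from (opened, closed) dates of resolved crawl issues."""
    return mean((closed - opened).days for opened, closed in closed_issues)


def top_n_share(positions: List[int], n: int) -> float:
    """Share of tracked keywords ranking in the top n (e.g. n=3 or n=10)."""
    return sum(1 for p in positions if p <= n) / len(positions) if positions else 0.0


def ctr_drop_alert(ctr_before: float, ctr_after: float, threshold: float = 0.15) -> bool:
    """True when CTR fell by more than `threshold` relative to the previous window."""
    return ctr_before > 0 and (ctr_before - ctr_after) / ctr_before > threshold


def roi_pct(incremental_profit: float, tool_cost: float) -> float:
    return (incremental_profit - tool_cost) / tool_cost * 100
```

The MTTR threshold check is simply `mttr_days(issues) > 7`, evaluated on the same weekly cadence as the crawl.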

Governance, quality, and compliance best practices

Good SEO automation runs on rails: clear rules for what the system can change, who approves it, and how quality is verified. Write these rules down, wire them into your workflows, and measure adherence. Treat every flow as a controlled process that moves through draft → review → approve → publish with traceable inputs, sources, and owners. That’s how you scale speed without sacrificing trust.

- Define scope and policy: List tasks that may run unattended vs. those that require human approval.

- RACI and approvals: Assign owners/reviewers; block production edits unless an approver signs off.

- Style and sourcing rules: Enforce a style guide; ban LLM‑generated metrics; require cited sources for facts.

- QA gates and scorecards: Use `acceptance_criteria.md` per template; track edits per 1k words and fact fixes.

- Staging, versioning, rollback: Test changes off‑site; use CMS revisions; keep a documented rollback plan.

- Audit logs and runbooks: Log inputs, prompts, outputs, approver, and timestamps; maintain a troubleshooting SOP.

- Data governance: Least‑privilege access to GSC/GA4; no PII to third‑party models; set retention windows; review vendor policies.

- Compliance and YMYL: Mandate SME/legal review for regulated topics; archive approvals with the content record.

- Monitoring and sampling: Spot‑check a weekly sample for tone, accuracy, and intent match; alert on drift.

Codify these into your orchestration layer so guardrails are enforced by default, not remembered ad hoc. That’s the difference between “fast” and “fast, reliable, and defensible.”
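The draft → review → approve → publish flow can be codified as an allowed-transitions table plus an append-only log. This is a minimal sketch. The state names follow the governance steps above. The class, the transition table, and the rule that an owner can't approve their own change are illustrative assumptions, not features of any orchestration tool.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List, Set

# Allowed moves through the draft -> review -> approve -> publish flow.
TRANSITIONS: Dict[str, Set[str]] = {
    "draft": {"review"},
    "review": {"approved", "draft"},  # reviewers can send work back
    "approved": {"published"},
    "published": set(),
}


class ChangeRecord:
    """Tracks one automated change (e.g. a title rewrite) with an append-only audit log."""

    def __init__(self, page: str, owner: str) -> None:
        self.page = page
        self.owner = owner
        self.state = "draft"
        self.log: List[dict] = []

    def advance(self, new_state: str, actor: str) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise PermissionError(f"{self.state} -> {new_state} is not allowed")
        if new_state == "approved" and actor == self.owner:
            raise PermissionError("owners cannot approve their own changes")
        self.log.append({
            "page": self.page,
            "from": self.state,
            "to": new_state,
            "actor": actor,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        self.state = new_state

    def audit_json(self) -> str:
        return json.dumps(self.log)
```

Any attempt to skip review, or to have the automation approve its own output, raises an error instead of shipping quietly.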

Automation for AI search and chat surfaces

Search is no longer just ten blue links. Google’s AI Overviews and chat assistants surface synthesized answers with citations, and inclusion there can drive or deflect clicks. While measurement is messy, you can automate the monitoring and content work that improves your odds of being referenced: detect when AI Overviews appear, harvest the sources they cite, and adapt your pages to match the questions, evidence, and structure these surfaces favor.

- AIO detection and alerting: Use a rank tracker that supports AI Overviews monitoring (e.g., SE Ranking) to flag tracked queries where an Overview appears and note whether your domain is cited.

- Citation harvesting and gap mapping: Capture the domains cited in Overviews and cluster by topic to see which competitors and content types win citations.

- Chat‑ready briefs: Auto‑generate briefs that emphasize concise Q&A sections, step lists, and evidence blocks with sources, so editors can add verifiable claims and author expertise.

- Freshness automations: Track date‑sensitive stats and set review cadences; alert when facts or prices go stale so pages stay citation‑worthy.

- Structured data and authorship: Validate FAQ/HowTo schema, author bios, and page last‑updated fields at scale; queue fixes with human approval.

Track simple KPIs in your dashboard to see progress over time:

AIO_presence_rate = AIO_keywords / tracked_keywords

AIO_citation_share = queries_with_your_citation / AIO_keywords

Pair these with rank and click trends to understand downstream impact.
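Both ratios are straightforward to compute from a rank tracker export, and so is the citation harvesting described above. This sketch assumes each tracked keyword row carries an AI Overview flag, a "cited" flag, and the list of cited URLs. Those column names are hypothetical, so map them to whatever your tracker actually exports.

```python
from collections import Counter
from typing import Dict, List, Tuple
from urllib.parse import urlparse


def aio_rates(rows: Dict[str, dict]) -> Tuple[float, float]:
    """rows: keyword -> {"aio": bool, "cited": bool, "citations": [urls]} (hypothetical schema).
    Returns (AIO_presence_rate, AIO_citation_share)."""
    aio_keywords = [k for k, r in rows.items() if r["aio"]]
    cited = [k for k in aio_keywords if rows[k]["cited"]]
    presence_rate = len(aio_keywords) / len(rows) if rows else 0.0
    citation_share = len(cited) / len(aio_keywords) if aio_keywords else 0.0
    return presence_rate, citation_share


def top_cited_domains(rows: Dict[str, dict], n: int = 3) -> List[Tuple[str, int]]:
    """Count which domains AI Overviews cite most often across tracked keywords."""
    domains = Counter(
        urlparse(url).netloc.removeprefix("www.")
        for r in rows.values() if r["aio"]
        for url in r.get("citations", [])
    )
    return domains.most_common(n)
```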

Security, privacy, and data considerations

Automation connects sensitive systems (Search Console, GA4, your CMS) to third‑party services and LLMs. The upside is speed; the risk is data exposure or unauthorized changes. Treat SEO automation like any other integration program: design for least privilege, transparency, and reversibility before you scale throughput.

Core principles

Start with constraints that reduce blast radius and make every action traceable.

- Least privilege access: Grant read‑only to GSC/GA4 initially; scope CMS/API tokens to staging or specific endpoints.

- Isolate credentials: Use service accounts and secret managers; never hard‑code keys in prompts or scripts.

- Staging before prod: Route automated edits to drafts/queues; require human approval for live changes.

- Rotate and revoke: Enforce token rotation, SSO/MFA, and immediate revocation on role changes.

- Audit everything: Keep run logs (inputs, outputs, approver, timestamps) and alert on failed/abnormal runs.

Vendor due diligence

Know how your data is handled before connecting a tool or model.

- Data processing and retention: Confirm DPAs, data residency options, and default retention/deletion timelines.

- Model usage: Verify content isn’t used to train models by default; prefer zero‑retention or opt‑out modes.

- Security posture: Look for independent controls (e.g., SOC 2/ISO) and documented incident response SLAs.

- Sub‑processors and exports: Review sub‑processor lists, encryption in transit/at rest, and your ability to export/erase data.

LLM‑specific safeguards

Prompts and outputs can leak more than you think. Put guardrails in the flow, not just in docs.

- No PII or secrets: Don’t send credentials, user IDs, or unpublished URLs to third‑party models; redact logs.

- Source‑of‑truth numbers: Pull metrics from GSC/GA4; forbid LLM‑generated KPIs and require cited sources for facts.

- Host where needed: For stricter control, run parts of the workflow on self‑hosted orchestration (e.g., n8n) and keep sensitive transforms in your environment.

- Rate limits and retries: Add backoff, timeouts, and circuit breakers to avoid bans or partial runs that leave systems inconsistent.
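A redaction pass before any prompt leaves your environment builds the "no PII or secrets" rule into the flow itself. The patterns below are illustrative assumptions. They cover emails, bearer tokens, key-like strings with sk-/pk-/api- prefixes, and URLs on hypothetical staging/dev subdomains. Regexes miss things, so treat this as one layer alongside least-privilege access, not a guarantee.

```python
import re

# Illustrative patterns only; extend them for your own identifiers and hostnames.
# Order matters: whole URLs and bearer headers are redacted before bare key patterns.
REDACTIONS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "UNPUBLISHED_URL": re.compile(r"https?://(?:staging|dev)\.\S+"),
    "BEARER": re.compile(r"(?i)bearer\s+[A-Za-z0-9._~+/=-]+"),
    "API_KEY": re.compile(r"\b(?:sk|pk|api)[-_][A-Za-z0-9_-]{16,}"),
}


def redact(text: str) -> str:
    """Replace sensitive substrings with labeled placeholders before calling an LLM."""
    for label, pattern in REDACTIONS.items():
        text = pattern.sub(f"[REDACTED_{label}]", text)
    return text
```

Run the same function over anything you write to logs, so that redacted values don't reappear in your audit trail.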

Data governance policies

Write policies the system can enforce automatically.

- Data minimization: Only store what the workflow needs; set TTLs on temp data and caches.

- Access reviews: Quarterly audits of who/what can touch GSC, GA4, and CMS endpoints.

- Backups and rollback: Version content changes and keep a tested rollback plan for automated edits.

- Change management: Annotate dashboards when new automations go live and require approvals for scope changes.

Build these controls once and reuse them across flows. You’ll ship faster with a smaller risk surface—and you’ll have the logs and levers to act quickly if something goes wrong.

Costs, pricing models, and ROI math

Budgeting for SEO automation is about stacking small, predictable subscriptions against the hours you reclaim. Most tools are affordable solo, but costs add up—so choose the minimum set that removes your biggest bottlenecks and prove payback fast.

- Freemium/tiered SaaS: Looker Studio (free), ChatGPT Plus ($20/mo), n8n (from $24/mo), Page Optimizer Pro (from $37/mo), Gumloop (free, then from $37/mo), SE Ranking (from $65/mo), Surfer AI (from $99/mo), Alli AI (from $169/mo), Clearscope (from $189/mo).

- Per‑site/credit models: LowFruits (from $29.99/mo), SEO Stack (from £49.99/mo), Relume (from $26/user/mo).

- All‑in‑one suite: RankYak is $99/mo for all features with a 3‑day free trial.

Total cost of ownership (TCO) includes more than licenses. Expect one‑time setup and workflow build hours, light maintenance (prompt updates, connectors), and editorial QA time for human approvals. Keep these visible in your ROI math.

Core formulas

Monthly savings ($) = hours_saved_per_month * blended_hourly_rate

Net ROI (%) = ((monthly_savings - monthly_tool_cost) / monthly_tool_cost) * 100

Payback (months) = setup_hours * hourly_rate / monthly_net_gain, where monthly_net_gain = monthly_savings - monthly_tool_cost

Example

- Save 12 hrs/mo on reporting, audits, and briefs at $60/hr = $720.

- Stack: RankYak ($99) + SE Ranking ($65) + Page Optimizer Pro ($37) = $201/mo.

- Monthly net gain = 720 - 201 = $519; ROI ≈ (519 / 201) * 100 ≈ 258%.

- If setup took 8 hrs, payback ≈ (8 * 60) / 519 ≈ 0.92 months.
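The same formulas fit in one function, which you can use to check the worked example or rerun it with your own numbers. This is a sketch. The tool prices are the list prices quoted above and may change. `setup_hours` is the one-time build effort from the example.

```python
from typing import Dict


def roi_summary(hours_saved: float, hourly_rate: float,
                tool_costs: Dict[str, float], setup_hours: float) -> Dict[str, float]:
    monthly_savings = hours_saved * hourly_rate
    monthly_tool_cost = sum(tool_costs.values())
    net_gain = monthly_savings - monthly_tool_cost
    # If automation doesn't cover its own cost, setup effort never pays back.
    payback = setup_hours * hourly_rate / net_gain if net_gain > 0 else float("inf")
    return {
        "monthly_savings": monthly_savings,
        "monthly_tool_cost": monthly_tool_cost,
        "monthly_net_gain": net_gain,
        "net_roi_pct": round(net_gain / monthly_tool_cost * 100, 1),
        "payback_months": round(payback, 2),
    }


summary = roi_summary(
    hours_saved=12,
    hourly_rate=60,
    tool_costs={"RankYak": 99, "SE Ranking": 65, "Page Optimizer Pro": 37},
    setup_hours=8,
)
print(summary)
```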

Instrument these numbers in your dashboard so finance sees hours saved and cash impact alongside traffic and rankings.

Frequently asked questions

Below are quick answers teams ask when they first consider SEO automation. Use them as guardrails: automate the repeatable, keep judgment work human, and measure results against a clear baseline so you know what’s working and what to tune.

- What is SEO automation? Using software and AI to run repetitive SEO tasks (reports, audits, rank tracking, clustering, briefs) on a schedule so humans can focus on strategy and editing.

- Which tasks are safe to automate? Reporting, rank tracking/alerts, recurring crawls, keyword clustering, SERP-to-brief drafts, on‑page recommendations with approval, internal linking suggestions, and content decay detection.

- What should stay human-led? Strategy and prioritization, final keyword selection, SME/thought leadership content, compliance reviews, and high‑impact technical changes.

- Will automation hurt quality or rankings? It can if unreviewed AI text or bad data ships. Protect outputs with approval gates, style guides, and source KPIs from systems of record (Search Console, GA4).

- How do I estimate ROI? Compare tool cost to time saved (hours_saved * hourly_rate), and track payback months. Baseline 30–90 days, then remeasure post‑automation.

- Do I need developers? Not necessarily. No/low‑code agent builders (e.g., Gumloop, n8n) let marketers orchestrate flows with prompts, connectors, and approvals.

- How fast will I see impact? Operational wins (time saved, cycle time) show within 30 days; ranking and traffic gains follow as you ship consistently and fix issues faster.

Final thoughts

SEO automation is a force multiplier, not a replacement for strategy. Wire up a few reliable workflows, keep humans in the approval loop, and let software handle the repetitive lifts. Start with reporting, audits, rank tracking, and SERP‑to‑briefs; measure cycle time and hours saved; then expand into internal linking and content decay detection. With guardrails and clear KPIs, you’ll ship more, fix faster, and protect quality.

If you want an end‑to‑end loop without stitching tools, an all‑in‑one can get you there faster. RankYak automates keyword discovery, daily content plans, SEO‑optimized drafts, auto‑publishing, and backlink building in one place—built for lean teams that need consistent results. There’s a 3‑day free trial, so you can baseline, turn on automations, and see impact before you commit.

Pick one flow, automate it this week, and review the numbers in 30 days. That’s how you move from busywork to compounding SEO gains—on purpose and on schedule.
