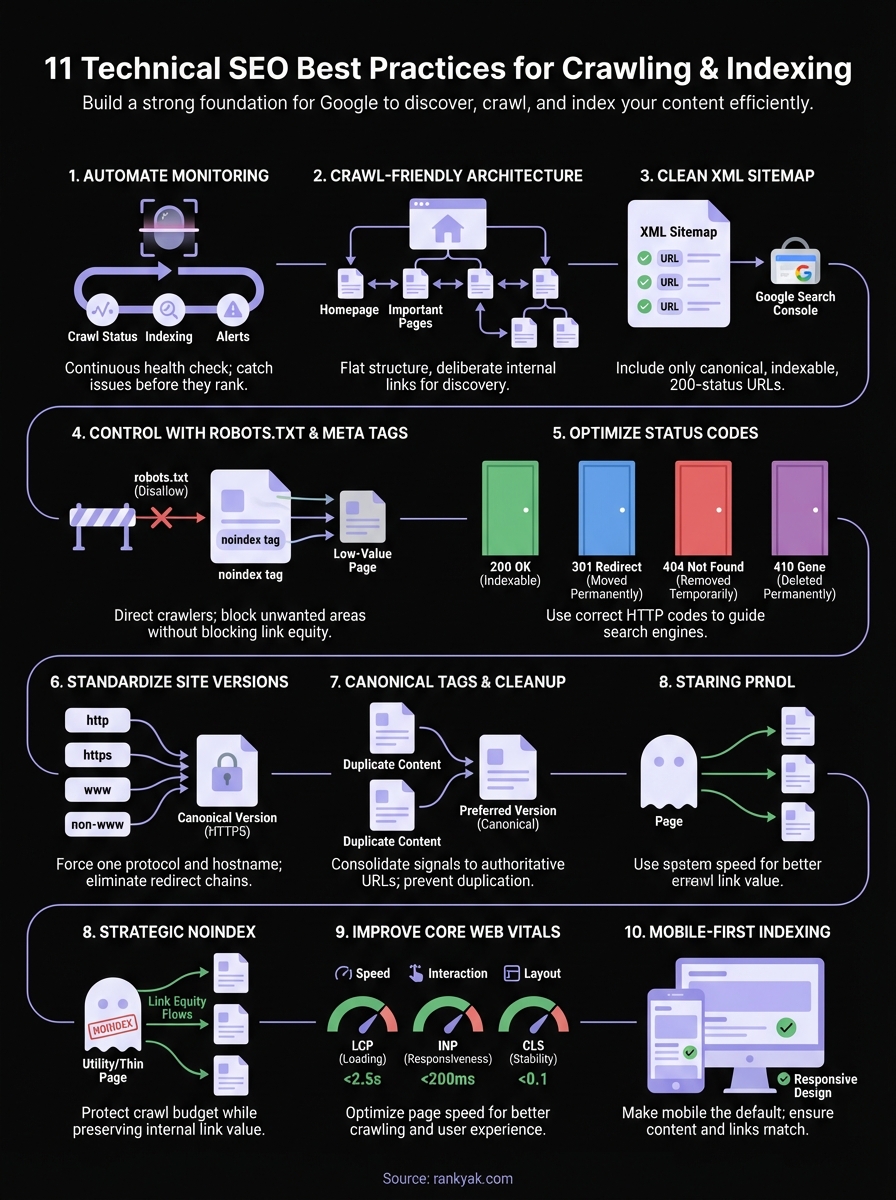

10 Technical SEO Best Practices for Crawling & Indexing

You can publish the best content on the internet, but if search engines can't crawl and index your pages properly, that content won't rank. Technical SEO best practices form the foundation that makes all your other optimization efforts work. Without them, you're essentially building a house on sand.

Here's the reality: most websites have technical issues they don't even know about. Broken links, slow page speeds, crawl budget waste, and indexing problems silently kill your rankings while you wonder why traffic isn't growing. The good news? These issues are fixable, once you know what to look for.

This guide covers 10 essential practices that directly impact how Google discovers, crawls, and indexes your site. At RankYak, we've built these principles into our automated content publishing system because we know that even perfectly optimized articles won't perform if the technical foundation is broken. Whether you're managing one site or dozens, these actionable steps will help you eliminate the hidden barriers standing between your content and higher rankings.

1. Monitor technical SEO automatically with RankYak

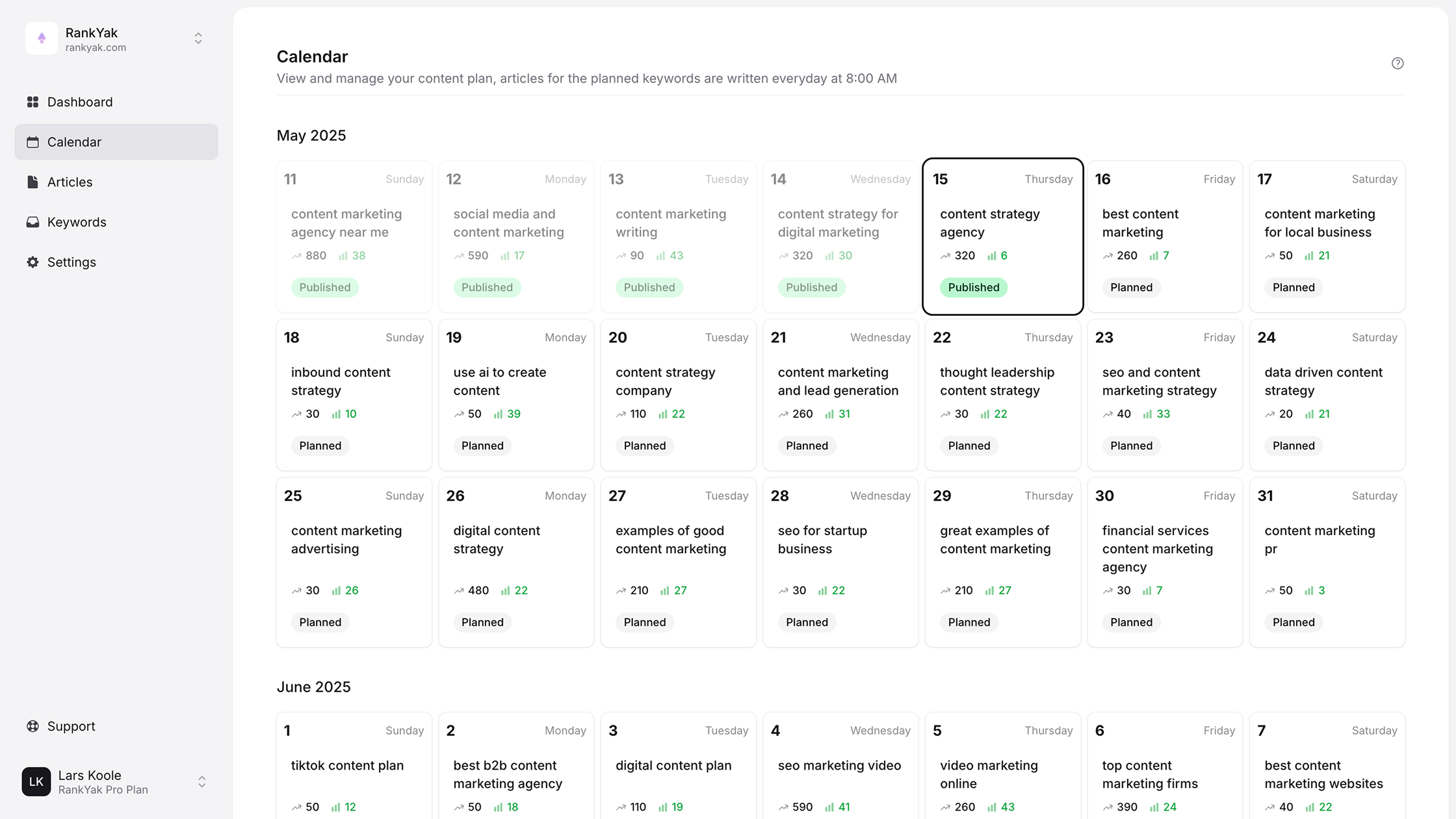

You can't fix what you don't measure. Manual technical audits take hours to complete and become outdated the moment you publish new content or make site changes. RankYak solves this by continuously monitoring your site's technical health as it publishes fresh content daily, catching crawlability and indexing problems before they hurt your rankings.

When you automate both content creation and technical monitoring, you eliminate the lag between publishing and detection. Most platforms force you to choose between speed and quality, but RankYak's approach means every new article gets checked for technical issues as part of the publishing workflow, not weeks later during a manual audit.

Set up a baseline crawl and indexing snapshot

RankYak records an initial technical baseline the moment you connect your site. This snapshot captures your current crawl status, indexing state, and site structure so you can measure improvements over time. You'll see which pages are indexed, which return crawl errors, and where your site architecture needs work.

The baseline also identifies existing technical debt like broken links, redirect chains, and orphaned pages that manual checks often miss. You get a clear starting point that shows exactly where your site stands before any automated content gets published.

Track changes that impact crawlability and indexing over time

Continuous monitoring means you spot problems immediately instead of discovering them during quarterly audits. Every time RankYak publishes a new article to your site, it checks that the page is crawlable, indexable, and properly integrated into your site architecture.

RankYak automatically validates that new content follows technical SEO best practices, from proper canonical tags to clean URL structures, so you never accidentally publish pages that waste crawl budget.

You receive alerts when something breaks, whether it's a plugin conflict that blocks indexing or a site update that creates redirect loops. This real-time feedback keeps your technical foundation strong as your content library grows.

Catch issues early as you publish new content at scale

Publishing daily means technical problems compound fast if left unchecked. A single misconfigured setting can noindex dozens of articles before you notice. RankYak's integrated monitoring catches these configuration errors the moment they appear, not after they've damaged your rankings.

This approach is especially valuable when managing multiple sites under one account. You can't manually audit five or ten sites every day, but RankYak handles the technical validation across your entire portfolio automatically. Each site gets its own monitoring stream, so you always know which properties need attention.

2. Build a crawl-friendly site architecture and internal links

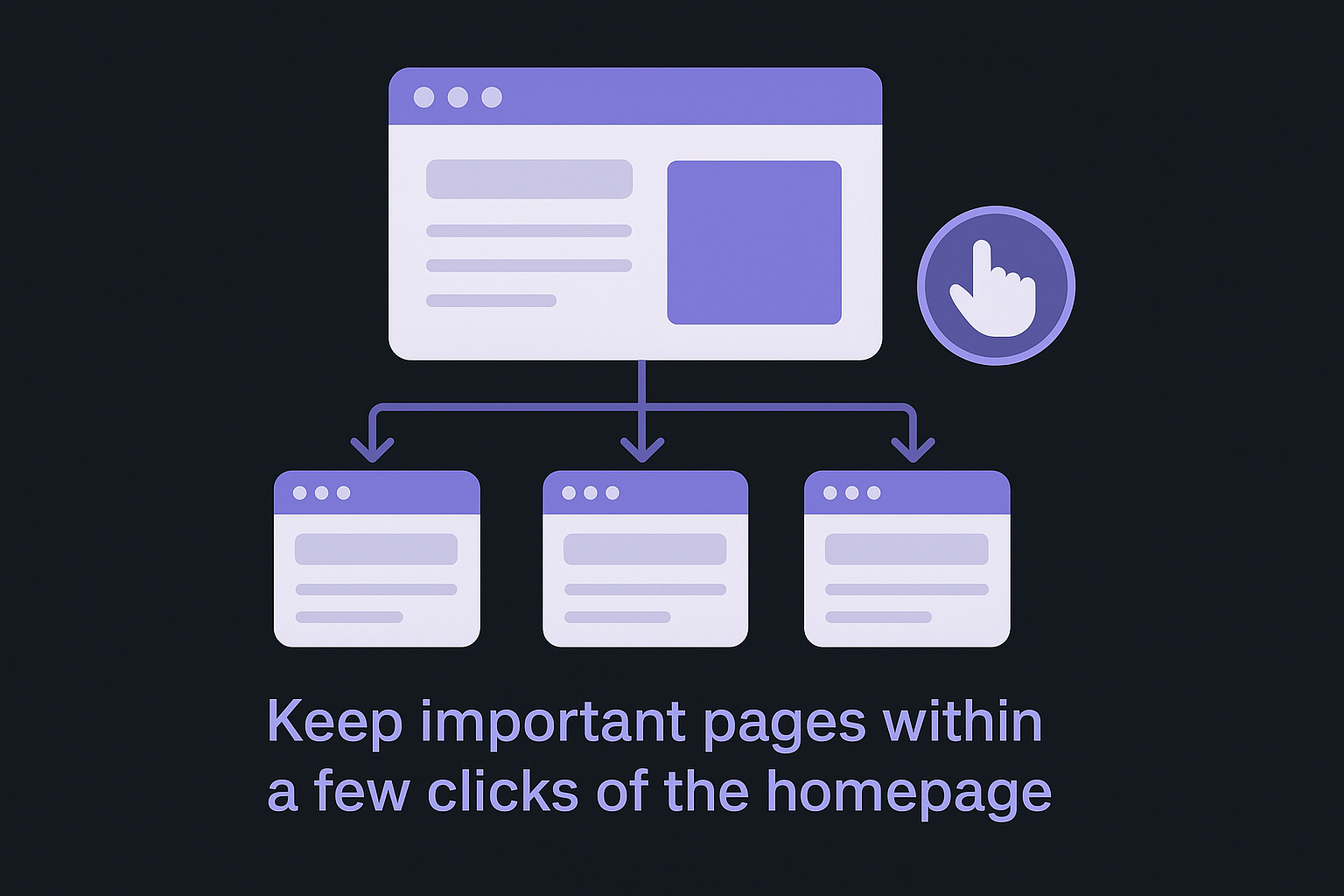

Your site's structure determines how easily search engines discover and crawl your content. A flat architecture that keeps important pages close to the homepage helps Google understand which content matters most. When you organize content logically and link pages together deliberately, you create pathways that guide crawlers through your site efficiently instead of forcing them to waste resources on dead ends.

Poor architecture creates crawlability problems that no amount of content optimization can fix. Pages buried six or seven clicks deep rarely get crawled frequently, and orphaned pages without internal links might never get discovered at all. Following technical SEO best practices for site structure means treating your internal linking strategy as seriously as your external backlink efforts.

Keep important pages within a few clicks of the homepage

On most sites, Google crawls the homepage more often than any other page. When you place high-priority content within three clicks of the homepage, you signal its importance and help crawlers reach it quickly. This flat structure beats deep hierarchies that bury valuable pages under multiple category and subcategory layers.

Navigation menus, footer links, and homepage feature sections all provide direct pathways to important content. You should audit your site's click depth regularly to identify pages that deserve better placement in your architecture.
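A click-depth audit boils down to a breadth-first search from the homepage over your internal-link graph. The sketch below assumes you already have that graph from a crawl export (the `site` mapping is hypothetical) and flags pages deeper than the three-click guideline.

```python
from collections import deque

def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; depth = minimum clicks to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first via a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal-link graph: page -> pages it links to
site = {
    "/": ["/blog/", "/pricing/"],
    "/blog/": ["/blog/technical-seo-guide/"],
    "/blog/technical-seo-guide/": ["/blog/archive/2019/"],
    "/blog/archive/2019/": ["/blog/old-post/"],
}
depths = click_depths(site)
too_deep = [url for url, depth in depths.items() if depth > 3]
print(too_deep)  # ['/blog/old-post/']
```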

Eliminate orphan pages with deliberate internal linking

Orphan pages have zero internal links pointing to them, so crawlers can't discover them by following your site's navigation. They depend entirely on your sitemap or external links, and they often stay unindexed despite being published. Connect every page to at least one other relevant page through contextual internal links.

Strategic internal linking distributes crawl equity throughout your site, helping new content get discovered and indexed faster while reinforcing the authority of your most important pages.
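Orphans show up when you compare the URLs you know exist (from your sitemap or CMS) with the URLs that actually receive internal links. A minimal sketch, assuming both inputs come from your own exports; `find_orphans` and the sample data are illustrative, not a specific tool's API.

```python
def find_orphans(known_urls: set[str], links: dict[str, list[str]], home: str = "/") -> set[str]:
    """Pages that no other page links to (the homepage is exempt)."""
    linked = {target for source, targets in links.items()
              for target in targets if target != source}  # self-links don't count
    return known_urls - linked - {home}

# Hypothetical inputs: URLs from your sitemap or CMS, plus a crawl's link graph
sitemap_urls = {"/", "/blog/", "/blog/post-a/", "/blog/post-b/"}
link_graph = {
    "/": ["/blog/"],
    "/blog/": ["/blog/post-a/"],
    "/blog/post-b/": ["/blog/post-b/"],  # a self-link does not rescue an orphan
}
print(find_orphans(sitemap_urls, link_graph))  # {'/blog/post-b/'}
```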

Use consistent, descriptive URL paths and navigation

Clean URL structures help crawlers understand your site's organization at a glance. A descriptive path like /blog/technical-seo-guide communicates far more than /?p=12345, making it easier for Google to categorize and index your content correctly. Consistency matters because frequently changing URL patterns forces redirects and makes performance harder to track.

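Your navigation should mirror your URL structure, creating a logical hierarchy that both users and crawlers can follow. Build menus from standard <a href="..."> links: Google only follows links that are <a> elements with an href attribute, so navigation that relies on JavaScript click handlers alone can hide linked pages from crawlers.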
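To see which navigation links a crawler can pick up from raw HTML, you can collect only `<a>` elements that carry an `href`, the link format Google documents as followable. This sketch uses Python's standard `html.parser`; it doesn't execute JavaScript, and the sample markup is hypothetical.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collects href values from <a> elements, the only links Google says it follows."""
    def __init__(self):
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # <a> without href (e.g. onclick-only links) is skipped
                self.hrefs.append(href)

nav_html = """
<nav>
  <a href="/blog/technical-seo-guide">Technical SEO guide</a>
  <span onclick="location.href='/pricing'">Pricing</span>
  <a onclick="goTo('/contact')">Contact</a>
</nav>
"""
collector = LinkCollector()
collector.feed(nav_html)
print(collector.hrefs)  # ['/blog/technical-seo-guide']
```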

3. Create and submit an XML sitemap that search engines trust

Your XML sitemap acts as a roadmap for search engines, telling them which pages exist on your site and when each one last changed. A well-constructed sitemap helps Google discover and index your content faster, especially new articles that don't have many internal links yet. When you follow technical SEO best practices for sitemap creation, you remove any ambiguity about which URLs you want to appear in search results.

Many sites create sitemaps that hurt more than help by including wrong URLs or outdated pages. You need to treat your sitemap as a curated list of your best, most indexable content rather than dumping every URL into an XML file and hoping for the best.

Include only canonical, indexable, 200-status URLs

Your sitemap should contain only pages you want crawled and indexed. This means excluding URLs that return 404 errors, redirect to other pages, or carry noindex tags. Every URL in your sitemap must return a 200 status code and represent the canonical version of that content.

Check that you're not listing parameter variations, pagination pages, or duplicate URLs that should point to a canonical version instead. Every non-canonical URL in your sitemap sends Google to a page you don't want indexed, wasting crawl budget.
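The filtering rule above can be expressed as a single check over your crawl data. This sketch assumes each crawled URL has a status code, its declared canonical, and a noindex flag; the `CrawledPage` record is a hypothetical shape, not the output format of any particular crawler.

```python
from dataclasses import dataclass

@dataclass
class CrawledPage:
    url: str
    status: int      # HTTP status code returned for the URL
    canonical: str   # URL declared in the page's rel="canonical" tag ("" if none)
    noindex: bool    # True if a robots meta tag or X-Robots-Tag says noindex

def sitemap_eligible(pages: list[CrawledPage]) -> list[str]:
    """Keep only URLs that return 200, are indexable, and canonicalize to themselves."""
    return [p.url for p in pages
            if p.status == 200 and not p.noindex and p.canonical == p.url]

pages = [
    CrawledPage("https://example.com/guide/", 200, "https://example.com/guide/", False),
    CrawledPage("https://example.com/old-guide/", 301, "", False),
    CrawledPage("https://example.com/guide/?sort=asc", 200, "https://example.com/guide/", False),
    CrawledPage("https://example.com/thank-you/", 200, "https://example.com/thank-you/", True),
    CrawledPage("https://example.com/missing/", 404, "", False),
]
print(sitemap_eligible(pages))  # ['https://example.com/guide/']
```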

Keep sitemap freshness high as your site changes

Regenerate your sitemap automatically whenever you publish new content or remove old pages, and keep each URL's lastmod date accurate. A stale sitemap that omits new URLs or still lists deleted ones slows down how quickly your new articles get discovered and indexed.

Google has said it uses lastmod when the dates are consistently accurate and ignores the changefreq and priority fields, so update lastmod only when a page's content meaningfully changes.
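Regenerating the sitemap from your CMS on every publish keeps it current. A minimal sketch using the standard library's `xml.etree.ElementTree`, assuming you can map each URL to the date its content last changed (the sample entries are hypothetical). It emits only `loc` and `lastmod`, since Google ignores `changefreq` and `priority`, and `ElementTree` escapes characters like `&` in URLs for you.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries: dict[str, date]) -> str:
    """Render a <urlset> with one <url> per page and the date its content last changed."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_changed in sorted(entries.items()):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = last_changed.isoformat()  # YYYY-MM-DD
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")

sitemap_xml = build_sitemap({
    "https://example.com/": date(2025, 1, 10),
    "https://example.com/blog/technical-seo-guide/": date(2025, 1, 15),
})
print(sitemap_xml)
```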

Submit and validate in Google Search Console

Submit your sitemap in Google Search Console and check it for errors regularly. The Sitemaps report shows whether Google could fetch and parse the file, and the Page indexing report, filtered to that sitemap, shows which submitted URLs are indexed and why others aren't, such as being blocked by robots.txt or redirecting elsewhere. You'll catch these problems before they impact your rankings.

4. Control crawling with robots.txt and robots meta tags

Your robots.txt file and robots meta tags give you direct control over what search engines can crawl and index. These directives form a critical part of technical SEO best practices because they keep crawlers from wasting effort on pages that shouldn't rank. When configured correctly, robots.txt steers crawlers away from administrative pages and other low-value areas, while noindex keeps specific pages out of search results.

Mistakes with robots directives can accidentally block your entire site from being indexed or create conflicts that confuse search engines about your intentions. You need to audit these settings regularly to ensure they're helping rather than hurting your visibility.

Allow crawling of pages you want indexed

Check that your robots.txt file doesn't block important sections of your site like blog posts, product pages, or service descriptions. Common mistakes include blocking entire directories that contain indexable content or using overly broad disallow rules that catch more than intended. Your robots.txt should primarily focus on blocking truly unwanted areas rather than trying to manage everything through blanket restrictions.

Block low-value areas without blocking essential assets

Use robots.txt to prevent crawlers from wasting time on admin panels, search result pages, thank-you pages, and cart functionality that shouldn't appear in search results. However, you must avoid blocking CSS, JavaScript, or image files that Google needs to properly render your pages for mobile-first indexing.

Blocking critical rendering resources in robots.txt prevents Google from understanding your page layout and content, which can hurt your rankings even if the HTML is crawlable.
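A robots.txt along these lines blocks low-value areas while leaving CSS, JavaScript, and image paths crawlable. The paths are hypothetical. The check uses Python's `urllib.robotparser`, which is handy for quick tests but simpler than Google's matcher: it applies the first matching rule and doesn't understand `*` or `$` wildcards, so confirm important rules in Search Console's robots.txt report as well.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks low-value areas, leaves CSS/JS/images crawlable
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/
Disallow: /search
Disallow: /thank-you/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/blog/technical-seo-guide/", "/assets/site.css", "/cart/checkout", "/search?q=seo"]:
    allowed = parser.can_fetch("Googlebot", f"https://example.com{path}")
    print(f"{path}: {'crawlable' if allowed else 'blocked'}")
```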

Avoid accidental noindex and disallow conflicts

Don't combine a robots.txt disallow and a noindex meta tag on the same page. When you block a page in robots.txt, crawlers can't fetch it to read the noindex tag, and the URL can still appear in search results (without a description) if other pages link to it. If you want a page excluded from search results, allow crawling and add a noindex meta tag. Reserve robots.txt disallow for URLs you simply don't want crawled.
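You can catch this conflict automatically by checking every URL that carries a noindex tag against your robots.txt rules. A sketch, assuming you already have the list of noindexed URLs from a crawl (the sample URLs are hypothetical); it relies on `urllib.robotparser`, with its first-match, no-wildcard limitations.

```python
from urllib.robotparser import RobotFileParser

def find_conflicts(robots_txt: str, noindexed_urls: list[str], agent: str = "Googlebot") -> list[str]:
    """URLs that carry noindex but are disallowed, so crawlers can never read the tag."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindexed_urls if not parser.can_fetch(agent, url)]

robots = "User-agent: *\nDisallow: /private/\n"
noindexed = [
    "https://example.com/private/report/",  # blocked: the noindex is never seen
    "https://example.com/thank-you/",       # crawlable: the noindex works
]
print(find_conflicts(robots, noindexed))  # ['https://example.com/private/report/']
```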

5. Fix indexability issues with proper status codes

Search engines rely on HTTP status codes to determine which pages should be indexed and how to handle pages that have moved or disappeared. When your server returns incorrect status codes, you create indexability problems that prevent Google from properly cataloging your content. Following technical SEO best practices for status codes means ensuring every page communicates its actual state accurately, whether that's successfully loaded, permanently moved, or truly gone.

Return 200, 301, 404, and 410 status codes correctly

Your live, indexable pages must return a 200 status code to signal that the content loaded successfully. Permanently moved pages need a 301 redirect, which passes link equity to the new location. Pages that no longer exist should return 404 (not found), or 410 (gone) when you know the removal is permanent; Google treats both as a signal to drop the URL from the index. Mixing these up confuses search engines about which pages deserve ranking consideration and which should be removed from the index entirely.
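The mapping from page state to status code is simple enough to express as a lookup. This framework-agnostic sketch assumes three hypothetical inventories (live pages, permanent moves, and deliberately removed pages); in a real stack the same logic usually lives in your CMS, web server, or CDN rules.

```python
# Hypothetical URL inventory; in practice this comes from your CMS or redirect config
LIVE = {"/guide/"}
MOVED = {"/old-guide/": "/guide/"}   # permanent moves
GONE = {"/discontinued-product/"}    # deliberately and permanently removed

def status_for(path: str) -> tuple[int, dict[str, str]]:
    """Return the HTTP status code and headers the server should send for a path."""
    if path in LIVE:
        return 200, {}
    if path in MOVED:
        return 301, {"Location": MOVED[path]}  # permanent redirect to the new URL
    if path in GONE:
        return 410, {}                         # gone for good
    return 404, {}                             # unknown URL: not found

print(status_for("/old-guide/"))  # (301, {'Location': '/guide/'})
```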

Resolve soft 404s and error pages that return 200

Soft 404s happen when your server returns a 200 status code for pages that should actually show errors. These pages display "not found" messages to users but tell Google the page exists and might be worth indexing. You can keep a custom, branded error page; just configure your server to send it with a 404 or 410 status code instead of 200.

Fixing soft 404s stops Google from wasting crawl budget on pages that have no value, allowing crawlers to focus on your actual content instead.
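A rough first pass at finding soft 404s is to flag pages that return 200 while their body reads like an error page. The phrase list is a hypothetical heuristic; Google's own detection is more sophisticated, and Search Console's Page indexing report lists the URLs it considers soft 404s.

```python
# Hypothetical phrases your theme's error template might use
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing was found")

def looks_like_soft_404(status: int, body: str) -> bool:
    """Flag pages that say 'not found' to users but report success (200) to crawlers."""
    text = body.lower()
    return status == 200 and any(phrase in text for phrase in NOT_FOUND_PHRASES)

print(looks_like_soft_404(200, "<h1>Page Not Found</h1>"))  # True
print(looks_like_soft_404(404, "<h1>Page Not Found</h1>"))  # False: status is already correct
```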

Find and fix broken internal links and broken pages

Audit your site regularly for internal links pointing to pages that return 404 errors. Broken links trap crawlers in dead ends and waste the crawl equity you could be passing to working pages. Update or remove broken links, and consider implementing 301 redirects from dead URLs that still receive traffic or backlinks to relevant replacement content.
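Given a crawl's internal-link graph and the status code of each URL, broken links fall out of a simple join. A sketch with hypothetical sample data; it reports the source page too, since that's where the fix happens.

```python
def broken_links(links: dict[str, list[str]], statuses: dict[str, int]) -> list[tuple[str, str, int]]:
    """List (source page, target URL, status) for internal links to 4xx/5xx targets."""
    report = []
    for source, targets in links.items():
        for target in targets:
            status = statuses.get(target)
            if status is not None and status >= 400:
                report.append((source, target, status))
    return report

links = {"/": ["/guide/", "/old-promo/"], "/guide/": ["/missing-page/"]}
statuses = {"/": 200, "/guide/": 200, "/old-promo/": 410, "/missing-page/": 404}
for source, target, status in broken_links(links, statuses):
    print(f"{source} -> {target} ({status})")
```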

6. Standardize your preferred site version and redirects

Inconsistent site versions create duplicate content problems that split your authority across multiple URLs pointing to identical content. When your site responds to http, https, www, and non-www versions without forcing one canonical version, search engines must decide which to index, and they might choose differently for different pages. Part of following technical SEO best practices is eliminating these variations so all your ranking signals consolidate into a single, authoritative version of your site.

Force one hostname and protocol version

Choose whether your site will use www or non-www and http or https (always choose https for security), then configure your server to redirect all other variations to your preferred version. You should set up 301 redirects that automatically send visitors and crawlers from http://www.example.com, http://example.com, and https://www.example.com to your chosen version like https://example.com. This ensures Google sees only one version worth indexing.
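The decision behind a host and protocol redirect fits in a few lines: parse the incoming URL and rebuild it with your preferred scheme and hostname. This sketch assumes non-www HTTPS (`example.com`) as the chosen version; in production you'd express the same rule in your web server or CDN configuration.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

PREFERRED_SCHEME = "https"
PREFERRED_HOST = "example.com"  # hypothetical choice: non-www

def preferred_url(url: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = (parts.hostname or "").removeprefix("www.")
    if host != PREFERRED_HOST:
        return None  # not one of this site's hostnames
    target = urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, ""))
    return None if target == url else target

for url in ["http://www.example.com/guide/", "http://example.com/guide/",
            "https://www.example.com/guide/", "https://example.com/guide/"]:
    print(url, "->", preferred_url(url))
```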

Clean up redirect chains and loops

Redirect chains happen when one URL redirects to another that redirects to yet another before reaching the final destination. These chains waste crawl budget, and long ones can make Googlebot stop before it reaches your content (Google documents a limit of 10 hops). Audit your redirects to ensure each old URL points directly to the final landing page in a single hop. Also check for redirect loops, where pages redirect to each other endlessly, which completely blocks indexing.

Eliminating redirect chains and loops improves crawl efficiency and ensures link equity flows directly to your target pages instead of being diluted through multiple hops.
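Flattening chains means resolving each redirect to its final destination and rewriting the rule to point there directly. A sketch over a hypothetical source-to-target redirect map; the 10-hop cap is an assumed safety limit, chosen to echo the limit Google documents for Googlebot.

```python
def resolve(redirects: dict[str, str], start: str, max_hops: int = 10) -> tuple[str, int]:
    """Follow redirects from start; return (final URL, hop count). Raise on loops or runaway chains."""
    seen = {start}
    current, hops = start, 0
    while current in redirects:
        current = redirects[current]
        hops += 1
        if current in seen or hops > max_hops:
            raise ValueError(f"redirect loop or chain over {max_hops} hops starting at {start}")
        seen.add(current)
    return current, hops

def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Rewrite every rule so it points straight at its final destination (single hop)."""
    return {source: resolve(redirects, source)[0] for source in redirects}

chain = {"/a": "/b", "/b": "/c", "/c": "/final"}  # hypothetical redirect rules
print(resolve(chain, "/a"))  # ('/final', 3)
print(flatten(chain))        # {'/a': '/final', '/b': '/final', '/c': '/final'}
```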

Keep trailing slash and lowercase rules consistent

Decide whether your URLs will use trailing slashes (/page/ versus /page) and stick to one format across your entire site. Apply the same consistency to uppercase versus lowercase characters in URLs. Configure your server to redirect variants to your chosen standard so you don't create duplicate versions of the same content at slightly different URLs.
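One way to enforce these rules is a normalizer that lowercases the path and applies a single trailing-slash policy, then 301-redirects any request whose URL changes. This sketch assumes your site has no case-sensitive paths and treats paths whose last segment contains a dot (like `/sitemap.xml`) as files that shouldn't get a slash.

```python
from urllib.parse import urlsplit, urlunsplit

def normalize_path(url: str, trailing_slash: bool = True) -> str:
    """Lowercase the path and apply one trailing-slash policy to page-like URLs."""
    parts = urlsplit(url)
    path = parts.path.lower() or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if "." not in last_segment:  # assume paths without a file extension are pages
        if trailing_slash and not path.endswith("/"):
            path += "/"
        elif not trailing_slash and path != "/":
            path = path.rstrip("/")
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))

for url in ["https://example.com/Blog/Technical-SEO-Guide",
            "https://example.com/blog/technical-seo-guide/",
            "https://example.com/sitemap.xml"]:
    target = normalize_path(url)
    print(url, "-> 301 to " + target if target != url else "(already canonical)")
```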

7. Prevent duplicate content with canonical tags and cleanup

Duplicate content dilutes your ranking potential by splitting authority across multiple URLs that display identical or near-identical information. Search engines struggle to decide which version deserves to rank, often choosing the wrong one or showing none of them prominently. Implementing canonical tags correctly and cleaning up duplicate content issues represents one of the most impactful technical SEO best practices because it consolidates all your ranking signals into single, authoritative URLs.

Identify the most common causes of duplication

Your site creates duplicates through URL parameters (like sorting and filtering options), session IDs, tracking codes, and printer-friendly versions of pages. Product pages that appear under multiple category paths, HTTP versus HTTPS versions, and www versus non-www variants all generate duplicate content. You also face duplication from pagination, AMP versions, and syndicated content that appears on both your site and partner sites.
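Parameter-driven duplicates can be grouped by stripping parameters that don't change page content and comparing what's left. The ignore list below is hypothetical; which parameters are safe to drop depends entirely on how your site uses them.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that never change the main content on this site
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}

def content_key(url: str) -> str:
    """Drop ignorable parameters (and the fragment) so equivalent URLs share one key."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k.lower() not in IGNORED_PARAMS)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def duplicate_groups(urls: list[str]) -> dict[str, list[str]]:
    """Group URLs by content key and keep only groups with more than one member."""
    groups = defaultdict(list)
    for url in urls:
        groups[content_key(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}

urls = [
    "https://example.com/shoes/?utm_source=newsletter",
    "https://example.com/shoes/?sort=price",
    "https://example.com/shoes/",
    "https://example.com/shoes/?color=red",
]
print(duplicate_groups(urls))
```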

Implement rel canonical the right way

Add canonical tags to the HTML head of every page, pointing to the preferred version you want indexed. Self-referencing canonicals on your main pages reinforce that they're the authoritative versions, while variant pages should canonicalize to the original. Your canonical URL must return a 200 status code and be indexable without noindex tags or robots.txt blocks.

Canonical tags are a strong hint rather than a directive, but when Google accepts them it consolidates link signals into the preferred URL and shows that version in results, solving duplicate content problems without losing valuable backlinks.
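To audit canonicals at scale, extract each page's `rel="canonical"` links and compare them with the page's own URL. A sketch using `html.parser` with hypothetical markup; it doesn't verify that the tag sits inside `<head>` or that the target returns 200, both of which you'd also want to check.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Records the href of every <link rel="canonical"> element on the page."""
    def __init__(self):
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])

def check_canonical(page_url: str, html: str) -> str:
    """Classify a page's canonical setup relative to its own URL."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing"
    if len(set(finder.canonicals)) > 1:
        return "conflicting"  # several different canonicals send mixed signals
    target = finder.canonicals[0]
    return "self-referencing" if target == page_url else f"points to {target}"

head = '<head><link rel="canonical" href="https://example.com/guide/"></head>'
print(check_canonical("https://example.com/guide/", head))                 # self-referencing
print(check_canonical("https://example.com/guide/?ref=newsletter", head))  # points to https://example.com/guide/
```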

Decide when to merge, redirect, or keep variants

Merge nearly identical pages into comprehensive resources when content overlap serves no purpose. Use 301 redirects from duplicate URLs to canonical versions when pages truly duplicate each other. Keep legitimate variants only when they serve different user needs, like regional versions or product variations, and use proper hreflang tags or canonicalization to clarify the relationship between them.

8. Use noindex strategically to keep low-value pages out of the index

The noindex directive stops pages from appearing in search results while still allowing crawlers to access them and follow their links. It becomes one of the most useful technical SEO best practices when you want low-value pages out of search results without breaking your internal linking structure. Unlike robots.txt blocks, noindexed pages can still pass link equity through their outbound links, which suits pages that serve navigational purposes but shouldn't rank. Keep in mind that noindex doesn't save crawl budget directly: Google has to crawl a page to see the tag.

Decide what should never appear in search results

Apply noindex tags to pages that users need but search engines shouldn't show, like login pages, account dashboards, checkout steps, and internal search results. Your thank-you pages, confirmation screens, and filtered product views that create near-duplicates also belong in this category. These pages offer nothing to searchers arriving from Google, so indexing them only dilutes your indexed content.

Noindex thin, duplicate, and utility pages without harming key pages

Tag pages, archive pages, and author pages with minimal unique content often deserve noindex treatment so thin pages don't dilute the overall quality of your indexed content. Utility pages like terms of service, privacy policies, and disclaimers rarely need to rank but must remain accessible. Be careful not to noindex pages that contain valuable content or serve as important hubs in your site architecture.

Strategic noindexing keeps your index focused on pages that can actually drive traffic. If your goal is to stop Google from crawling a section at all, use robots.txt instead, since Google must still fetch a page to see its noindex tag.

Validate indexation with URL inspections and site queries

Use Google Search Console's URL Inspection tool to confirm that your noindex tags are working and that pages are excluded from the index as intended. Run site:yourdomain.com searches to spot indexed pages that should carry noindex directives but don't, keeping in mind that site: results are only a rough sample of what's indexed. Check regularly that you haven't accidentally noindexed important content during site updates or theme changes.
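A noindex can come from a robots meta tag or from an `X-Robots-Tag` HTTP header, so an automated check should look at both. This sketch treats `noindex` and `none` as excluding the page; it doesn't parse user-agent-specific header values such as `googlebot: noindex`, which you'd need to handle separately. Inputs are hypothetical.

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""
    def __init__(self):
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() in {"robots", "googlebot"}:
            for directive in (attributes.get("content") or "").split(","):
                self.directives.add(directive.strip().lower())

def is_noindexed(html: str, headers: dict[str, str]) -> bool:
    """True if the HTML or the X-Robots-Tag header tells search engines not to index."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    header_value = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    header_directives = {d.strip().lower() for d in header_value.split(",")}
    return bool({"noindex", "none"} & (finder.directives | header_directives))

print(is_noindexed('<meta name="robots" content="noindex, follow">', {}))  # True
print(is_noindexed("<p>Guide</p>", {"X-Robots-Tag": "noindex"}))          # True
print(is_noindexed("<p>Guide</p>", {}))                                   # False
```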

9. Improve page speed and Core Web Vitals for better crawling

Page speed affects both how efficiently Google crawls your site and how well your content ranks. When your server responds quickly, Google can fetch more URLs per visit without overloading it, so new content gets discovered sooner; slow responses lead Googlebot to crawl fewer pages. Optimizing Core Web Vitals as part of technical SEO best practices improves the user experience signals Google uses in ranking, and the same work (lighter pages, faster servers) supports crawl efficiency across your entire site.

Prioritize Largest Contentful Paint, Interaction to Next Paint, and Cumulative Layout Shift

Focus on the three Core Web Vitals that Google measures: Largest Contentful Paint (how quickly your main content renders), Interaction to Next Paint (how fast your page responds to user interactions), and Cumulative Layout Shift (how stable your layout remains while loading). To pass, a page needs an LCP of 2.5 seconds or less, an INP of 200 milliseconds or less, and a CLS of 0.1 or less, measured at the 75th percentile of real-user page loads. Test your pages with PageSpeed Insights to see which metrics need work.

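Good Core Web Vitals scores signal that your pages deliver solid user experiences, which feeds into Google's page experience ranking signals. They don't set your crawl priority directly; crawl rate depends mainly on server responsiveness and how much demand Google sees for your content.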
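Because Google assesses each metric at the 75th percentile of real-user page loads, a page passes only when most visits are fast. This sketch classifies hypothetical field samples against the published good and poor boundaries (LCP 2.5 s / 4 s, INP 200 ms / 500 ms, CLS 0.1 / 0.25) using a simple nearest-rank percentile, which approximates but isn't identical to how the Chrome UX Report aggregates data.

```python
import math

# Published Core Web Vitals boundaries: (upper bound for "good", upper bound for "needs improvement")
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}

def p75(samples: list[float]) -> float:
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]

def assess(metric: str, samples: list[float]) -> str:
    good, needs_improvement = THRESHOLDS[metric]
    value = p75(samples)
    if value <= good:
        return "good"
    return "needs improvement" if value <= needs_improvement else "poor"

lcp_ms = [1800, 2100, 2400, 2600, 3900, 2200, 2000, 2300]  # hypothetical field samples
cls_scores = [0.05, 0.30, 0.12, 0.02]
print(assess("LCP", lcp_ms))      # good
print(assess("CLS", cls_scores))  # needs improvement
```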

Reduce heavy scripts, unused CSS, and render-blocking resources

Audit your site for JavaScript files and CSS stylesheets that block your page from rendering quickly. Remove unused code, defer non-critical scripts, and inline critical CSS to speed up initial rendering. Third-party scripts like analytics trackers and social media widgets often cause the biggest slowdowns.
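A quick way to find render-blocking scripts is to list external `<script>` tags in `<head>` that have neither `async` nor `defer`; module scripts are deferred by default, so they're excluded too. A sketch with hypothetical markup; it doesn't cover render-blocking stylesheets.

```python
from html.parser import HTMLParser

class BlockingScriptFinder(HTMLParser):
    """Lists external scripts in <head> that block rendering (no async/defer, not a module)."""
    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # boolean attributes such as defer map to None
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and "src" in attributes:
            deferred = ("async" in attributes or "defer" in attributes
                        or attributes.get("type") == "module")
            if not deferred:
                self.blocking.append(attributes["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False

page = """<html><head>
<script src="/js/analytics.js"></script>
<script src="/js/app.js" defer></script>
<script src="https://widgets.example.net/social.js" async></script>
</head><body><p>Hello</p></body></html>"""
finder = BlockingScriptFinder()
finder.feed(page)
print(finder.blocking)  # ['/js/analytics.js']
```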

Optimize images, caching, compression, and CDN delivery

Compress and resize your images to appropriate dimensions instead of serving full-resolution files that browsers must scale down. Enable browser caching so returning visitors don't reload static assets on every visit. Use gzip or brotli compression to reduce file transfer sizes, and consider a content delivery network to serve resources from servers closer to your users.
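Text assets like HTML, CSS, and JavaScript are highly repetitive, which is why compression pays off. This sketch uses Python's `gzip` module to measure the effect on a hypothetical, deliberately repetitive HTML fragment, so real pages will compress less dramatically. Brotli isn't in the standard library, and in practice you enable either one in your server or CDN settings rather than in application code.

```python
import gzip

def gzip_size(payload: bytes, level: int = 6) -> int:
    """Size in bytes after gzip compression at the given level (1 = fastest, 9 = smallest)."""
    return len(gzip.compress(payload, compresslevel=level))

card = "<div class='product-card'><h2>Trail shoe</h2><p>Lightweight and breathable.</p></div>\n"
page = (card * 200).encode("utf-8")  # hypothetical, highly repetitive markup
original, compressed = len(page), gzip_size(page)
print(f"{original} bytes -> {compressed} bytes ({compressed / original:.1%} of original)")
```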

10. Make mobile the default for rendering and indexing

Google switched to mobile-first indexing years ago, which means it uses the mobile version of your pages for indexing and ranking, even for desktop searches. When your mobile experience falls short or differs significantly from your desktop version, you risk losing rankings across all devices. Treating mobile as an afterthought instead of the primary experience is one of the most damaging violations of technical SEO best practices you can commit in 2026.

Confirm responsive layouts and viewport configuration

Your site needs a responsive design that adapts seamlessly to different screen sizes rather than serving separate mobile URLs or requiring horizontal scrolling. Add the viewport meta tag (<meta name="viewport" content="width=device-width, initial-scale=1">) to every page so browsers know to scale your content appropriately. Test your pages on actual mobile devices to catch layout problems that desktop testing tools might miss.
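You can verify the viewport tag across many pages by parsing each page's `<meta name="viewport">` and confirming it sets `width=device-width`. A sketch with hypothetical inputs; passing this check doesn't guarantee a responsive layout, so real-device testing still matters.

```python
from html.parser import HTMLParser
from typing import Optional

class ViewportFinder(HTMLParser):
    """Stores the content attribute of the page's <meta name="viewport"> tag."""
    def __init__(self):
        super().__init__()
        self.content: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.content = attributes.get("content") or ""

def has_responsive_viewport(html: str) -> bool:
    """True if the page declares a viewport whose width follows the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return False  # no viewport tag at all
    settings = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"

print(has_responsive_viewport('<meta name="viewport" content="width=device-width, initial-scale=1">'))  # True
print(has_responsive_viewport('<meta name="viewport" content="width=1024">'))  # False
```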

Avoid mobile-only content gaps and hidden critical elements

Check that your mobile version displays the same core content as your desktop site. If your mobile HTML leaves out text, images, or structured data that appear on desktop, Google can't index that content, which creates gaps that hurt your rankings. Google does index content that is present in the mobile HTML but collapsed in tabs or accordions. Your mobile navigation should still link to all important pages with crawlable links, even when menus sit behind a hamburger icon.

When you strip content from your mobile pages that appears on desktop, Google's mobile-first indexing misses that information entirely, treating it as if it doesn't exist on your site.
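A rough parity check compares the words in the HTML your server sends to mobile and desktop clients (for example, fetched with each user agent). This bag-of-words sketch skips `<script>` and `<style>` contents; because it works on HTML, text collapsed with CSS but present in the mobile markup counts as present. The sample pages are hypothetical.

```python
import re
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects text content, skipping anything inside <script> or <style>."""
    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)

def words(html: str) -> set[str]:
    extractor = TextExtractor()
    extractor.feed(html)
    return set(re.findall(r"[a-z0-9']+", " ".join(extractor.chunks).lower()))

def missing_on_mobile(desktop_html: str, mobile_html: str) -> set[str]:
    """Words present in the desktop HTML but absent from the mobile HTML."""
    return words(desktop_html) - words(mobile_html)

desktop = "<h1>Trail shoes</h1><p>Waterproof and grippy.</p><table><tr><td>Weight 280g</td></tr></table>"
mobile = "<h1>Trail shoes</h1><p>Waterproof and grippy.</p>"
print(sorted(missing_on_mobile(desktop, mobile)))  # ['280g', 'weight']
```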

Fix mobile usability issues that block engagement and indexing

Google retired its Mobile-Friendly Test and Search Console's Mobile Usability report in December 2023, so check for problems like text that's too small, clickable elements placed too close together, or content wider than the screen with Lighthouse, Chrome DevTools device emulation, and real devices. Give touch targets enough spacing that users can tap buttons without accidentally hitting adjacent links. Fix these issues before they hurt engagement and rankings.

Next steps

You now have 10 actionable technical SEO best practices that directly impact how search engines crawl and index your content. Each practice addresses a specific weakness that holds sites back from ranking, from crawl budget waste to mobile usability failures. The challenge isn't knowing what to do; it's finding time to implement and maintain these optimizations while publishing content consistently.

Manual technical audits consume hours you could spend growing your business. You check status codes, validate sitemaps, fix broken links, and monitor Core Web Vitals, only to repeat the process next month when your site changes. This reactive approach means problems damage your rankings before you catch them.

RankYak eliminates this cycle by automating both content creation and technical monitoring. Your site gets fresh, optimized articles every day while the platform validates crawlability, indexing status, and site structure automatically. You focus on strategy while RankYak handles the technical foundation that makes content rank.
